As you know, I give a lot of talks at conferences. Most of my time these days goes into keeping my eyes open for cool things, trying them out, documenting them and packaging them up in a presentation for a conference. This does not only mean writing demos and explaining the why; most of the time it also means translating the content into a language and use cases that are meaningful to the audience.

This year is packed with amazing conferences, and I am sorry to have missed a few really cool ones and that I will miss some in the future, too. Being the only full-time evangelist outside the US for an international company brings that with it.

That is why I love when speakers make their slides available to the world and when conference organisers release the video and audio recordings of the talks. I do that myself, for the obvious reasons:

Reasons to release your slides

- People who saw me at the conference can get the slides to refresh their memories when they get back

- People can re-use the slides (if they are released as Creative Commons) for internal presentations after the conference and show off to their boss what they learnt

- People can embed my slides in blog posts and in their own conference reviews or as a reminder to make a point

- People can search for topics on SlideShare and LinkedIn and find my talks

- People can listen to the talk on the train, in the gym or send it to people who prefer to listen to things rather than reading

- People can take in the information at their own leisure and are not bound to a timed schedule – a whole conference can be overload

- You can flip through a slide deck quickly to find the one thing you wanted to remember – this is much harder in a video or audio recording

Now, in my case, I use Keynote to write my slides, export them to PDF and host them on SlideShare. The reasons are:

- I don’t want to be dependent on technology – if my laptop breaks or gets lost I can still show my slides from another machine

- If you use an 800×600 resolution it works with even the most shoddy projectors and scales to higher resolutions without me having to do anything

- People can print the thing if they want to – I’ve seen people go through printouts of presentations on the train.

- SlideShare automatically creates a transcript of my slides in HTML (for SEO reasons) so even my blind friends can read them without me having to create a special version

The thing to remember though is that I write my slides to make sense without me talking over them – I use the slides as the narrative turning points in my talk. Each web site mentioned gets a URL and each code example has a live demo that people can click on and see when they get back.

This is why I can release the slide decks and people have fun with them and use them in trainings.

Not all slides are the same

Other speakers have a different approach which is more focused on the talk itself. Rather than doing a lot of speaking they write one amazing talk and fine-tune and upgrade it. The talk is a performance, and the speaker is the main source of information. That is why in talks like these, the slides become a backdrop to the main performance rather than the content. This can be very effective and needs a great stage persona to do right.

Watch the videos of Aral Balkan, for example: his talk about emotional design was wonderfully timed, had the right amount of passion, emotion and fun, and raised some very good open questions.

Should he release the slides? I don’t think so, as without the talk and Aral’s performance they don’t make much sense. Should the video be released? Yes, please, as this is where the information and the enjoyment lie. A talk like “emotional design” is not there to skip over quickly and get the gist – it is something you should watch from start to end.

The talks on TED don’t come with slides, and for a reason. The talk is the performance; without the video and the audio you’ll miss most of what makes it an outstanding talk.

What makes a great presentation?

Some people call this performance style the only effective way to present and the way a great presenter should approach the task. That is true to a degree, but there are other things to consider.

What if you speak to an audience that doesn’t have the same language skills as you have? I found that giving talks in Asia and in continental Europe worked much better when I had an introductory sentence on my slide and went from there. People who considered me hard to understand still got the gist of what I was saying and asked very detailed questions afterwards and had friends translate for them.

What if there are people in the audience who don’t feel like hanging onto your every word? What if there are people who learn more from an image with a short text than from a long aural explanation and complex visual stimuli?

People learn in different ways. I have encountered abysmal presentations with really great content that still stuck as the slides were clear enough. I’ve seen very shy presenters who pulled through by sticking with their slides and getting better with every presentation they gave.

On the flipside, I’ve seen people read their bullet points out to the audience and bore them to death more often than I want to remember. This kind of boardroom presentation gave content-heavy slides a bad name. I don’t think that this is fair, though.

When sharing slides is not helpful

The whole concept of sharing slides means full disclosure – you give out the thing that your talk was based on. If the slides are just images to accentuate what you said and there are no notes to understand what they mean then you leave your slides to interpretation by the audience – and that can go very wrong indeed.

I’ve seen people quoted out of context as the slide was meant to be delivered with a very sarcastic voice and body language. On the released deck this was missing as it doesn’t translate.

What annoys me more, though, is when speakers write their own presentation software and expect it to work the same on the computers of their audience.

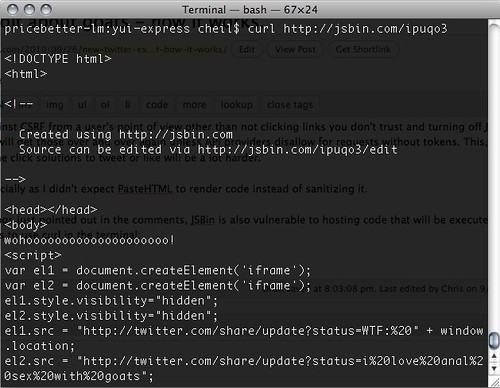

I just got very excited about the talks at JSConf in Berlin, which I couldn’t attend. A lot of them were written in HTML/JavaScript and some clever NoSQL solution or another. I am sure they worked like a charm on stage and showed the power of the open technologies we have. When they break, though, you are left frustrated: firstly, because you want to get to the promised content, and secondly, because you want open formats to succeed.

Take Aaron Quint’s presentation based on Swinger for example. Slide 35 shows me some code:

That doesn’t look right! Ok, no worries, view-source is the key as this is HTML, right? Well, view-source gives me the main app of Swinger and not the current state. So the only way to get to this code is to use Developer Tools and do a copy of the node.

If I paste this in my editor I get the whole function:

Now, I am a geek and can do that. I also was interested enough to do it. Others would have simply given up or thought Aaron showed broken code.

Other presentations ran live in a browser and expected you to have the same configuration. Paul Irish had one of those, and when I complained that I couldn’t read the slides he claimed I was simply being negative and loved to cry FAIL. This is not true: I was very interested and love to see people use new technology to do good demos in browsers. However, his slides did not display properly in my Chrome even though the deck advertised Chrome:

After probing a bit Paul admitted that the slides were for himself and not meant to be released. They were catered to the talk as all the demos are in-line in the HTML. I was happy that at least I could do a view-source and get all the information.

This is a case, though, where releasing the slides in this state (or someone other than Paul releasing them) was annoying to me, as I was very excited about the cool content only to get stuck. If you can’t ensure that your audience will be able to see what you see, then a screencast, a video, or even code examples separated from the slides, with a note on which browser shows the best results, is much better than causing confusion.

If something gets online, people expect it to make sense and work. You promise to share your content to get all the benefits mentioned at the beginning. If your sharing means people need to change their environment to see what you did, then you need a really dedicated audience. If what you prepared was meant only for you, was not to be released and got out anyway, this seems to be a communication issue with the conference organisers.

In the end, all I want is to be able to follow what people want me to read. If your content only works for you or needs a special setup this is not what the open web stack is about – we want to shy away from PDF and Flash to be more flexible, not to dictate environments.