Participating in the Web of Data with Open Standards

Wednesday, March 17th, 2010

These are the detailed notes for my talk at the MIX10 conference in Las Vegas. The description of my talk was the following:

Web development as we do it right now is on the way out. The future of the web is what its founders planned a long time ago: loosely joined pieces of information for you to pick and choose and put together in interfaces tailored to your end users. In this session, see how to build a web portfolio that is always up to date, is maintained by using the web rather than by learning a bespoke interface, and performs well because the data is pulled and cached for you by a high-traffic server farm rather than your own server. If you wondered how you can leave your footprint on the web without spending thousands on advertising and development, here are some answers.

The slides

The detailed notes / transcript

Welcome to the web

When I started using the web I was working for a radio station as a newscaster and producer. I’ve always dabbled with computers and connecting them world-wide and I was simply and utterly amazed at how easy it was to find information. This was 1996 and I convinced my boss at the radio station that it was of utmost importance that I get internet access to be the first in our town to get the news from Associated Press and other very important sources. In reality, I just fell in love with the web and its possibilities.

Working as a professional web developer

I quit my job soon after that and built my first web sites. Together with some friends I maintained a banner exchange, I was admin for a few mailing lists and on IRC, and I built a lot of really bad web sites which – back then – were the bee’s knees.

I went through the first .com boom living in a hotel in Los Angeles with all expenses paid for writing HTML.

Joining the Enterprise crew

I then worked for an agency and delivered lots of products with enterprise level content management systems. Massive systems intended to replace the inadequate systems of the past.

This is when things went wrong

For a few years I released things that were incredibly expensive and meant that the people using them had to be sent on £3K+ trainings just to be able to do the things they already did before that – only much less effectively. Or did they?

Joining the corp environment (to a degree)

Again, this was a very interesting step as it meant I moved away from the large world of delivering for clients to concentrating on one single company – one of the companies that defined the world wide web as it is now and also a large player in a newly emerging world.

Hey Ho, Web 2.0 highfive

Web 2.0 came around and ran rampant in the media and in the minds of early adopters. User Generated Content sounded like an awesome scam to make millions of dollars with content that comes for free – all you need to do is set up the infrastructure and find the right people.

And it went wrong again.

The main purpose of the first round of web2.0 was to be as visible as possible – doesn’t matter if all the content added by your users is really just “first” and “you’re a fag” comments – as long as the user numbers were great you won.

And now?

Makes you wonder what comes now, doesn’t it?

The web as the platform, the Mobile Web and GeoLocation

This is where we are. We can use the web as our platform, we work with virtual servers, hosted services and are happy to mix and match different services to get our UGC fix. What is missing though is the glue.

Market changes leave a track

The thing we keep forgetting, though, is the users. All the changes we’ve gone through bred generations of users that are happy to use what they learnt to do in their job but are not happy having to re-learn basic chores over and over again.

Time to shift down a gear

We have the infrastructure in place, we can already make this work. I get a real feeling that we are not innovating but turning the web into a mainstream medium. Streaming TV without commenting and massive successes like Farmville and MafiaWars make me realize that the web is becoming ubiquitous but that it is also boring for me as someone who wants to move it further. In order to accelerate with a car you need to shift down a gear (or kick down the accelerator with an automatic). This is the time to do so.

Finding the common denominator

What is the common denominator that drove all the innovation and movement in the past? Data. Information becoming readily available and interesting by mixing it with other information sources to find the story in the data.

Tapping into the world of data

Data is around us, it is just not well structured. People cannot even be bothered to write semantic HTML and it is tough to teach editors to enter tags, alternative text for images and video descriptions. The reason is that we lack success stories and learning our CMS takes too long. Instead of teaching people how to use systems we should teach them how to structure information and simplify the indexing process, rather than empowering them to make things pretty and shiny – this is what other experts do better.

Why APIs work

APIs or Application Programming Interfaces are the best thing you can think of if you want to build something right now. If you remove the information from the interface – both in terms of data entry and when it comes to consumption – we can build a web that works for everybody. By making information searchable and allowing filtering out of the box you can scale to any size or cut down to the bare minimum.

APIs made easy

The Yahoo Query Language, or YQL for short, is a very simple language that allows you to use the web like you would use a database. In its simplest form a YQL query looks like this:

select {what} from {where} where {conditions}

Say for example you want to find photos in Flickr with the keyword donkey. The YQL statement for this is the following:

select * from flickr.photos.search
where text="donkey" and license=4

The license=4 means that the photos you are retrieving and displaying are licensed with Creative Commons, which means that you are allowed to show them on your page. This is very important as Flickr users are happy to show photos but not all are happy for you to use their photos in your product. Play nice and we all get more good data.

YQL is not limited to Yahoo APIs and data – anything on the web can become accessible with it. If you want to find a flower pot in the Bay Area you can use for example Craigslist with YQL:

select * from craigslist.search where
location="sfbay" and type="sss" and query="flower pot"

If you want to get the latest news from Google about the topic of healthcare, you can use:

select * from google.news where q="healthcare"

If you want to collate different APIs and get one data set back, you can use the query.multi table. The following for example searches the New York Times archive, Microsoft Bing News and Google News for the term healthcare and returns one collated set of results:

select * from query.multi where queries in (
  'select * from nyt.article.search where query="healthcare"',
  'select * from microsoft.bing.news where query="healthcare"',
  'select * from google.news where q="healthcare"'
)

YQL even allows you to get information when there is no API available. For example in order to get the text of all the latest headlines from Fox News you can use YQL’s HTML table:

select content from html where
url="http://www.foxnews.com/" and xpath="//h2/a"

This goes to the foxnews.com home page, retrieves the HTML, runs it through the W3C HTML Tidy tool to clean up broken HTML and filters the information down to the text content of all the links inside headings of the second level. This is done via the xpath statement //h2/a which means all links inside h2 elements and you retrieve the content instead of everything with the *.
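To make the //h2/a selection more tangible, here is a small local sketch in Python. It is not how YQL works internally; it only shows what that XPath expression picks out of a page. The sample markup is invented and already well-formed (YQL tidies real pages first, this sketch does not), and the function name headline_texts is mine.

```python
import xml.etree.ElementTree as ET

# Invented, already well-formed markup standing in for a tidied page.
SAMPLE_PAGE = """
<html>
  <body>
    <h1><a href="/">Site name</a></h1>
    <h2><a href="/story-1">First headline</a></h2>
    <h2><a href="/story-2">Second headline</a></h2>
    <p><a href="/about">About us</a></p>
  </body>
</html>
"""


def headline_texts(markup):
    """Return the text of every link that sits directly inside an h2."""
    root = ET.fromstring(markup.strip())
    # ElementTree supports a subset of XPath; ".//h2/a" is its way of
    # writing "//h2/a" relative to the root element.
    return [link.text for link in root.findall(".//h2/a")]


print(headline_texts(SAMPLE_PAGE))  # ['First headline', 'Second headline']
```

The links in the h1 and in the paragraph are skipped, which is the same filtering the YQL statement asks for with its xpath condition.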

You can then do more with this content – for example using the Google translation API to translate the headlines into French:

select * from google.translate where q in (
  select content from html where url="http://www.foxnews.com/"
  and xpath="//h2/a"
) and target="fr"

As you can see it is pretty easy to mix and match different APIs to reach your goal – all in the same language.
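If you assemble such statements in a script rather than typing them by hand, the two composition patterns shown so far (query.multi and a nested sub-select) boil down to simple string building. The following Python helpers are a hypothetical sketch: the names multi_query and translate_query are made up, and the refusal to accept single quotes inside sub-queries is a simplification of mine, not a documented YQL rule.

```python
def multi_query(statements):
    """Wrap several YQL statements into one query.multi statement."""
    for statement in statements:
        # Simplification for this sketch: sub-queries are wrapped in single
        # quotes, so refuse input that would break out of them.
        if "'" in statement:
            raise ValueError("sub-queries must not contain single quotes")
    quoted = ",\n  ".join("'" + statement + "'" for statement in statements)
    return "select * from query.multi where queries in (\n  " + quoted + "\n)"


def translate_query(inner_select, target):
    """Feed the result of an inner select into the google.translate table."""
    return (
        "select * from google.translate where q in (\n  "
        + inner_select
        + '\n) and target="' + target + '"'
    )


print(multi_query([
    'select * from nyt.article.search where query="healthcare"',
    'select * from google.news where q="healthcare"',
]))
print(translate_query(
    'select content from html where url="http://www.foxnews.com/" and xpath="//h2/a"',
    "fr",
))
```

The output is plain YQL text, so you can paste it into the YQL console to check it before sending it anywhere.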

YQL goes further than just reading data though. If you have an API that allows for writing to it, you can do insert, update and delete commands much like you can with a database. You can for example post a blog post on a WordPress blog with the following command:

insert into wordpress.post
(title, description, blogurl, username, password)
values ("Test Title", "This is a test body", "http://yqltest.wordpress.com", "yqltest", "password")

The insert, update and delete tables require you to use HTTPS to send the information, which means that although your name and password are perfectly readable here they won’t leak out to the public – unless you are using them inside JavaScript where they would be readable.

The YQL endpoint

As YQL is a web service, it needs an endpoint on the web. For tables that don’t need any authentication this endpoint is the following URL:

http://query.yahooapis.com/v1/public/yql?q={query}&format=xml|json&callback={callbackfunction}

You can get the information back as XML or as JSON format. If you choose JSON then you can use the information in JavaScript and by providing a callback function even without any server interaction.
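The query has to be URL-encoded before it goes into the q parameter. As a minimal sketch of putting the endpoint URL together, here is a Python helper; the function name build_yql_url and the callback name showPhotos are invented for illustration, and the code only builds the URL without sending a request.

```python
from urllib.parse import urlencode

# Public endpoint as given in the text above.
YQL_PUBLIC_ENDPOINT = "http://query.yahooapis.com/v1/public/yql"


def build_yql_url(query, fmt="json", callback=None):
    """Build the request URL for a YQL statement (no request is made)."""
    if fmt not in ("xml", "json"):
        raise ValueError("format must be 'xml' or 'json'")
    params = {"q": query, "format": fmt}
    if callback:
        # Name of the JavaScript function the JSON should be wrapped in.
        params["callback"] = callback
    # urlencode takes care of escaping spaces, quotes and the asterisk.
    return YQL_PUBLIC_ENDPOINT + "?" + urlencode(params)


url = build_yql_url(
    'select * from flickr.photos.search where text="donkey" and license=4',
    callback="showPhotos",
)
print(url)
```

Leaving out the callback gives you a URL for plain JSON (or XML with fmt="xml"), which is what you would use from a server-side script.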

Benefits of using YQL

YQL is a way to use the web as a database. Instead of using your time reading up on different APIs, requesting access and learning how they work you can simply access and mix the data and you get it back ready to use a few minutes later. YQL does all this for you:

- No time wasted reading API docs – every YQL table comes with a desc command that tells you what parameters are expected and what data will come back for you to use.

- Creating complex queries with the console – the YQL console allows you to play with YQL and quickly click together complex queries in an easy to use interface. It also previews the information directly to you so you can see what comes back and in which format.

- Filter data before use – YQL allows you to select all the data in a certain query using the * or specifically define what you want – down to a very granular level. In very limited application environments like mobile devices this can be a real benefit. It also means that you don’t need to spend a lot of time converting information to something useful after you requested it and before you send it to the interface layer.

- Fast pipes – YQL is hosted on a distributed server network that is very well connected to the internet. Chances are that the server is fast to reach and a few times faster than your own server when it comes to accessing API servers from all over the world.

- Caching + converting – YQL by default gives out XML or JSON, which is useful for converting any data on the web from another format into these highly versatile and open data formats. YQL also has a built-in caching system that only fetches new data when it is available and returns the cached copy very fast when all you do is make the same request again.

- Server-side JavaScript – if the out-of-the-box filtering, sorting and converting methods are not enough for you, you can use YQL execute tables to run the returned data through JavaScript before YQL gives it back to you. This allows for all the conversion and extension power JavaScript comes with in a safe and powerful environment as we use Rhino to run it server-side.

All in all YQL allows you to really use and access data without the hassle of resorting to XSLT, Regular Expressions, scraping and other tried-and-true but also complex ways of getting things web-ready.

Government as a trailblazer?

One thing that gets me very excited lately is governments throwing out information for us to use. Data that has been gathered with our tax money is now available for experts and laymen to play with it, look at it and see where the interesting parts are.

Conjuring APIs out of thin air

The problem is that the government data is not available for us as APIs – instead you will find it entered into Excel spreadsheets and in all kinds of other data formats coming out of – yes – Microsoft products. We could now bitch about this and claim this is old school or do something about it. So how can we do this?

A few weeks ago I built http://winterolympicsmedals.com – an interface to research the history of the medals won in the Winter Olympics from 1924 up to now. There is no API for that and this data is also not available anywhere.

What happened was that the UK newspaper the Guardian released a dataset of this information on their data blog (this is a free service the newspaper provides). The data was provided as an Excel sheet and all I did was upload it to Google Docs. I then selected “Share” and “export as CSV” which gives me the following URL:

http://spreadsheets.google.com/pub?key=tpWDkIZMZleQaREf493v1Jw&output=csv

Now, using YQL with this we have a web service:

select * from csv where url="http://spreadsheets.google.com/pub?key=tpWDkIZMZleQaREf493v1Jw&output=csv" and columns="Year,City,Sport,Discipline,Country,Event, Gender,Type"

By giving the CSV some columns we can now filter by them, for example to get all the Silver medals of the 1924 games we can use:

select * from csv where url="http://spreadsheets.google.com/pub?key=tpWDkIZMZleQaREf493v1Jw&output=csv" and columns="Year,City,Sport,Discipline,Country,Event, Gender,Type" and Year="1924" and Medals="Silver"

Spreadsheet to web services made easy!

This makes it easy for us to turn any spreadsheet into a web service. With Google Docs as the cloud storage and YQL as the interface that also does the caching and limiting for us, it is not that hard to do.
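To show the idea of naming the columns and then filtering by them without any web service involved, here is a local Python analogy. It is a sketch of the principle only, not of what YQL does behind the scenes. The column list is the one from the queries above; the rows are invented placeholders and not taken from the Guardian dataset, and read_rows and filter_rows are names I made up.

```python
import csv
import io

# Column names as used in the YQL statements above.
COLUMNS = "Year,City,Sport,Discipline,Country,Event, Gender,Type"

# Invented placeholder rows, NOT real data from the Guardian spreadsheet.
SAMPLE_CSV = """\
1924,City A,Sport A,Discipline A,Country A,Event A,M,Value A
1924,City A,Sport B,Discipline B,Country B,Event B,W,Value B
1928,City B,Sport A,Discipline A,Country A,Event A,M,Value A
"""


def read_rows(text, columns):
    """Parse header-less CSV text, naming the fields after `columns`."""
    names = [name.strip() for name in columns.split(",")]
    return list(csv.DictReader(io.StringIO(text), fieldnames=names))


def filter_rows(rows, **conditions):
    """Keep only the rows in which every named column equals the given value."""
    return [
        row for row in rows
        if all(row[column] == value for column, value in conditions.items())
    ]


rows = read_rows(SAMPLE_CSV, COLUMNS)
matches = filter_rows(rows, Year="1924", Sport="Sport A")
print(matches)
```

Once the columns have names, every extra condition is just another key and value to compare, which is what the additional "and" clauses in the YQL statement do.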

In order to make the creation of a search form and results table easier, I built a PHP script that takes this job on:

Filters';
    echo $content['form'];
  }
  if($content['table']){
    echo 'Results';
    echo $content['table'];
  }
}
?>

Some examples

Let’s quickly go through some examples that show you the power of YQL and using already existing free, open systems to build a web interface.

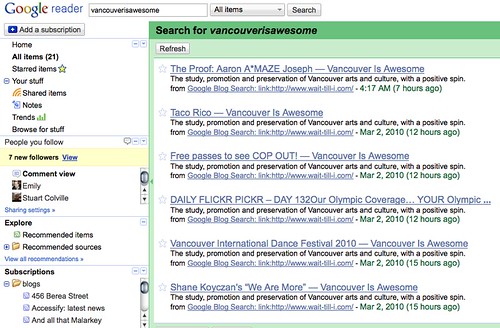

- GooHooBi is a search interface that allows you to search the web simultaneously using Google, Yahoo and Bing. You can see how it was done in a live screencast on Vimeo.

- UK House Prices is a mashup I built for the release of the UK government open data site. It allows you to compare the house prices in England and Wales from 1996 up to now and see where it makes sense to buy a place and how stable the prices are in that area.

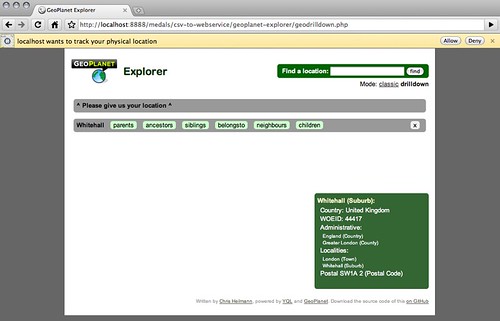

- GeoMaker is a

In summary

- We have the network and we have the technology.

- We have people who work effectively with the tools they use.

- We have a new generation coming who naturally use the internet and are happy with our web interfaces.

- If we split our efforts 50/50 between building new things and building APIs and converters to get at the data of the old, the web will rock.

Homework

As homework I want people to have a look at the open tables for YQL on GitHub and see what is missing. I’d especially invite people to add their APIs to the tables using the open table documentation. We want your data to allow people to play with it.

Learn more

If you want to learn more about building sites like the ones I showed with this approach, there is a video of me talking you through the building of UK-House-Prices.com available on the YUI blog.