We are in times of turmoil in the tech world right now. On the one side, we have the AI hype in full swing. On the other, companies are heavily “healthsizing”. I just went through a round of job interviews as a prime candidate and wrote up why I didn’t take some jobs. It made me realise a few things.

Developers are worried

Right now, developers worry about whether their jobs are safe or whether they could easily be replaced by AI. They also worry about how to stay relevant as developers and not fall behind in a market that seems to churn out ground-breaking innovations by the hour. Furthermore, they worry about how they should adjust their job expectations to not be part of the next round of mass layoffs.

Companies turn the screw and make developers feel that they are easily replaceable

All the big companies that developers want to work at right now flex their muscles trying to take away some of the freedoms and perks developers have been enjoying for a long time. To a degree, this is fine, as compared to other jobs we had a ridiculous amount of perks and freedoms. However, there are a few annoying things happening right now.

Companies in general implement an aggressive “back to office” policy which smacks of wanting to have more control over people rather than trusting their employees. Companies also cut costs at all cost. I’ve seen vital employees being laid off because they’ve been with the company for a long time and thus cost a lot.

Now, laying off someone with a long track record isn’t that much of an issue in the US. In Europe, however, the package you get for leaving and how many more months of salary you receive are tied to the amount of time you spent at the company. So, laying off an employee with 10+ years of service is ridiculously expensive.

Generally, companies seem to be testing how far they can cut perks, salaries and benefits and yet still have top-notch employees. Often this goes hand in hand with a narrative that “AI is changing everything” and developers are not as needed as they used to be.

A quick reminder about Developer Advocacy

In my developer advocacy handbook, I defined a developer advocate as:

A spokesperson, mediator and translator between a company and its technical staff and outside developers.

So, if you are a developer advocate in a company, now is the time to ramp up on the first part – ensuring good communication between the company and its developers. And – if need be – be their advocate in these cost-cutting negotiations.

This isn’t about the threat of AI

The argument that developers need to prove their worth to a company because of AI innovation is bogus. This isn’t about disruptive innovation. It is about ad sales plummeting and the stock market taking a punch. Companies are valued at profitability per head. So when profits go down – heads must roll.

Elon Musk with Twitter and others showed that you can act like a factory owner in the 1920s and people won’t complain and many people follow that example.

There’s also the problem that we live in a general time of uncertainty and decline. And this is where populism thrives. Conservative and far-right spokespeople and politicians have called the technology sector an “elite”: a job for only a chosen few, pampered millennials. So anything bad happening to the tech world is considered long overdue – we finally are also vulnerable. When the pandemic hit, the tech world was one of the few that still thrived as people needed to use the internet to communicate. When AI as a large new movement first came around, many people worried about their jobs. Now it is our turn to feel the same.

Some facts about AI and developer jobs

- AI will take jobs

- AI systems are a great way to make people more effective, thus saving the company money

- Developers should be knowledgeable about AI

- Most AI systems won’t be applicable to your company

- AI means a big change to developer careers

- Open LLMs are bad at teaching coding

Let’s go through them bit by bit and what developer advocates – or indeed anyone working in tech companies – should do about them.

AI will take jobs!

There is no doubt that some jobs will fall prey to AI now that it is much more readily available and computing power has caught up with its demands. The false narrative though is that it will replace specialist humans, experts and even highly creative jobs.

Generative AI is great at creating throwaway products. Quick prototypes, proofs of concept, an outline for an article that will need to get fact-checked and re-written before publication.

This isn’t new. There has been an ongoing demand for predictable, throw-away products for quite a while that don’t really need to be done by a skilled developer or designer. In the past, these jobs could also be done with a content management system and a template. If we are honest with ourselves, even developers and designers have taken shortcuts for decades, re-using other people’s code, working with design libraries or putting together components we bought and customised.

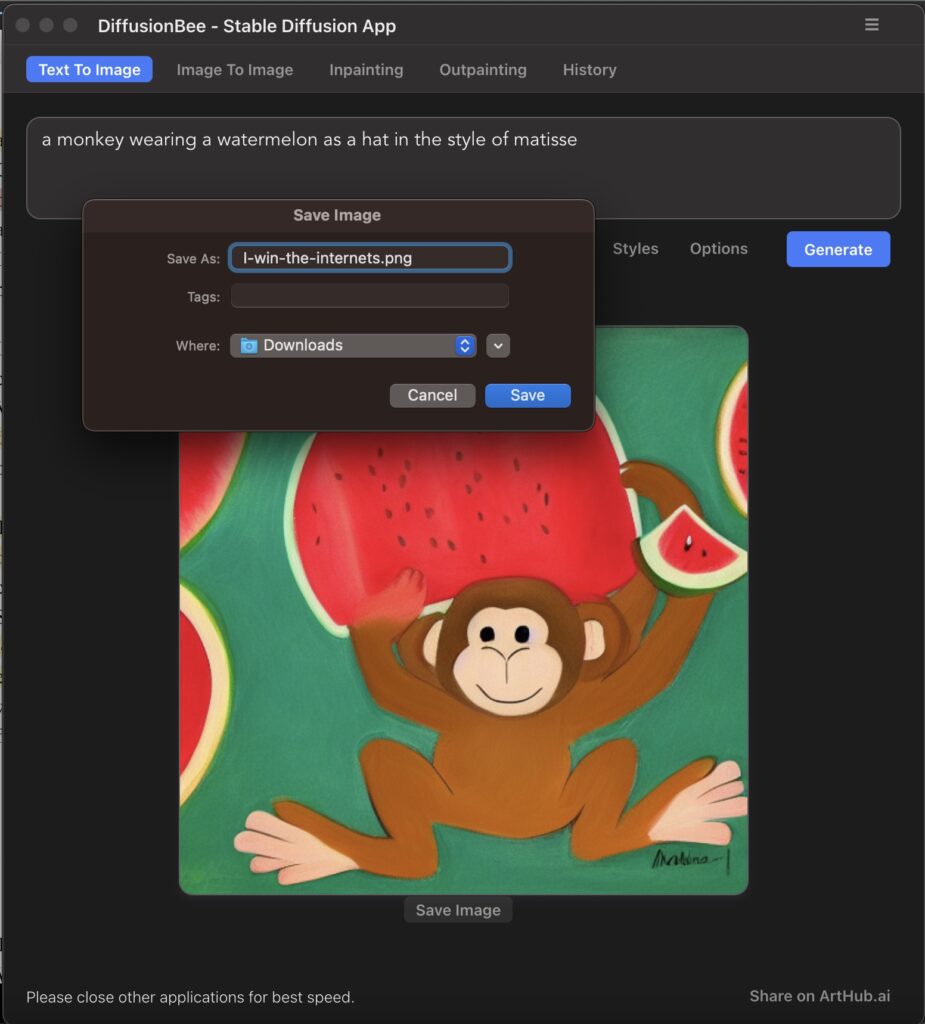

The cool new thing tech companies try to sell is that we can create a web product by asking an AI the right questions and it will give us designs, write copy for us and even create the code.

The first two results, writing generic copy and creating a “proven successful design” are worrying as they will result in thousands of look-alike products with no personal branding or character. This has been a worry of designers for quite a while now, but now we give people the illusion of being a director who can create by asking questions and refining them. The end products will still likely look like the same product with a different colour scheme and a different logo. Bootstrapping a product with a generic design and copy is a great way to get started, but it is not a way to build a brand.

Will this replace designers who know their job? Not really, as there is a lot more to good design and writing than creating one interface. Will this replace writers? Not really, as a good writer works with the brand and the media limitations instead of churning out clever-sounding copy.

The last part – that AI can also create all the code needed to build a web product – is a lie. Sure, you can generate code that creates this design with this copy and maybe add some basic interaction. But a real software product isn’t one design.

It aligns itself with the needs of the user, allows for customisation and lets people change the look and feel to their needs. It also needs to know where the data in the interface comes from and what to do with user interaction regardless of input device. And – even more importantly – it should never make any assumptions about the text content of the product, as that will eventually come from a CMS anyway and have to support multiple languages.

This means though that products that don’t need to adhere to these quality standards or even legal compliance needs can and should be done with a “what you see is what you get” interface. And if people are happy to start with a human question to get there – all power to them.

This kind of AI is currently taking jobs that have been nothing but frustrating to designers and developers. We all have spent far too many hours building prototypes, slideware and demos for product shows that were eventually for the bin. And we had to deal with dozens of rounds of feedback that people want “this bigger” or “a nicer font”. If these people can now annoy an AI with these kinds of demands – great.

If anything, this is a good opportunity to see where you apply developer and designer resources for products that will never be released anyway and redirect them to better causes. So here’s what we should do to deal with this threat:

- Analyse what part of our company’s work could be automated with AI.

- Provide coaching for teams to use these systems to create materials that can be augmented by the development team if needed.

- Communicate with upper management that automating these things frees up developer resources for other work these teams can do, rather than replacing them.

Applying AI in context makes people more effective and saves the company money

Where AI systems can help is by making people more effective. This is where the “in context” part comes in. There are three areas where AI can help:

- Machine Aided Code completion

- Aiding Collaboration and Learning

- Design to code conversion

Machine-aided code completion refers to “AI code editors” that help you write code. They are not new, but they are getting better. The idea is that you write a few lines of code and the editor suggests the rest. This is not just about auto-completion, but about context recognition and style recognition. The big players are GitHub Copilot and Amazon CodeWhisperer. In addition to auto-completing your code, these tools can also explain code and have granular controls like “make this code more robust” or “generate tests for this function”. GitHub Copilot also offers a chatbot interface that runs in the context of the currently open project, thus giving your developers the power of a chat client in their code editor. This also means that generated code is automatically checked by the editor’s existing syntax checking and linting.

The longer you use these tools, the more relevant the results get. I’ve been using Copilot since its beginning and whilst it offers me results I didn’t use before, it does mimic the way I write code. This means that it is not just a tool to save time, but also a tool to learn from. And that the generated code adheres to a standard that I use. This could be incredibly powerful in a team, where you could use an editor like this to enforce a coding standard and have the editor generate code that adheres to it without months of discussion.

Another interesting idea is Copilot for pull requests, which is autocompletion for pull request descriptions. You tell it to write a summary and step-by-step instructions and it uses the code in the pull request to create those. This saves a lot of time and ensures that your pull request descriptions are always up-to-date. Unreadable or unactionable pull requests are a big problem in many companies and this could be a great way to solve it.

There is already evidence that using a tool like this is great for the efficiency of teams. The paper “The Effect of AI-Assisted Code Completion on Software Development Productivity” describes a study where a team of developers used a tool like this and were 40% more efficient than a team that didn’t.

On the design front, there are some interesting plugins for Figma and other design suites available. Locofy generates React and other framework components from your designs and helps with automated tagging of those. Deque’s design tools give designers an idea of what a certain component should look like in code and how to make it accessible.

It is up to you to find out how this could boost the efficiency of your teams:

- Determine what tools your company could implement in-context and coach the teams how to use them.

- Test and validate the systems available in the market.

Developers should know about AI

There is no doubt that developers should know about AI and get an in-depth look at how these “magical” systems that can seemingly replace them work. This means that companies should now ramp up on getting their employees to understand the workings and limitations of “AI”. Five years ago I wrote a course for Skillshare called Demystifying Artificial Intelligence: Understanding Machine Learning. Whilst it is of course not up-to-date with current changes, it still does a good job of making people understand what “thinking machines” can do and where the limits, ethical issues and technical problems are.

The big players also understand that there is a need for engineers to know the inner workings of the big AI systems. DeepLearning.ai, for example, has a ChatGPT Prompt Engineering for Developers course that explains how to ask the right questions, and Google has a full in-depth Generative AI learning path. Microsoft has a Quickstart Guide on how to build a chatbot for your own data.

The things we should be doing now are:

- Collect up-to-date materials and courses and distribute them in the team

- Negotiate with management to get a budget for official training on AI matters

Not all AI systems will work for your company

Whilst it is great to see all the things people do with AI, it is important to understand that not all of these systems will work for your company. Interestingly enough, even the big players like Google and Amazon warn internally about using chatbots as they are worried about data leaks and the systems being used for malicious purposes. So it seems there is a huge “Do as I say, not as I do” going on here.

Companies need to question themselves if they can allow AI systems to index their codebase, if the internal setup and dependencies can even be accessed and if they are happy to have their codebase be used to train an AI system. Other questions are about usage and compliance. Can you use code without knowing its license or knowing where it came from? How does AI generated code fit into your review and compliance processes? How can you ensure code quality and security?

Steps we need to take now are:

- Lead team members to review different tools and present them to the company, followed by a discussion of whether the tools are applicable.

- Assess and review different AI offerings and work with leads and upper management to see what can be applied.

Our careers as developers change

The biggest change we have to deal with right now is that, once generating content with AI becomes the first step, our careers are affected. We need to be aware that we are not the ones writing the code anymore, but we are the ones reviewing it. This traditionally is the job of a senior developer, but it is now the job of all developers. We need to change our career goals and expectations. The role of a junior developer becomes a lot smaller and people need to ramp up faster when it comes to their assessing and reviewing skills. And senior developers need to do more coaching and training of juniors to spot issues in generated code rather than writing code or doing reviews of hand-written code. In essence, we are merging two career levels into one. This could be a good thing, and it could save the company money in the long run.

To make this happen we need to:

- Work with management and lead engineers to define a company strategy to make reviewing code a core skill.

- Re-assess hierarchy and career goals and expectations accordingly.

Open LLMs are bad at teaching code

There are hundreds of demos and videos where people use ChatGPT to write code, build a web site or write a game. These are a great inspiration and I am all for people learning about code in a playful and engaging manner. The problem is that this doesn’t teach us how to program. It shows us how to use a tool to generate code. This is a huge difference. Granted, some tools also come with code explanations, but none of them tell you about shortcomings of the code. None of them tell you about security issues or how to make the code more performant. None of them tell you about the license of the code or if it is even legal to use it.

This is nothing new. Developers have been copying and pasting code they don’t understand from forums and tutorials for ages, changing some numbers around and, when nothing blew up, submitting it to the project. This, however, was also an incredibly dangerous thing to do.

LLMs are great at making things sound like they are easy to do. They are good at making people feel like they are great developers. But great developers know why the code they write works, not just write code that works. Even worse, bad actors already use this as an opportunity to make developers use malicious and insecure code. There have been instances where ChatGPT-generated code proposed packages that were misspelled or didn’t exist and, when installed, they installed malware.
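One way to guard against this is to compare every module that generated code imports against a list your team has already vetted, before anyone installs anything. Here is a minimal Python sketch of that idea. The allowlist contents and the function name `find_unapproved_imports` are made up for illustration, and it assumes that import names match package names, which isn’t always true in practice.

```python
import ast

# Hypothetical allowlist: top-level modules the team has vetted.
APPROVED_MODULES = frozenset({"json", "math", "requests"})


def find_unapproved_imports(source, approved=APPROVED_MODULES):
    """Return sorted top-level module names imported by `source` that are not approved."""
    tree = ast.parse(source)
    found = set()
    for node in ast.walk(tree):
        if isinstance(node, ast.Import):
            for alias in node.names:
                found.add(alias.name.split(".")[0])
        elif isinstance(node, ast.ImportFrom):
            # Relative imports (level > 0) point inside the project, so skip them.
            if node.level == 0 and node.module:
                found.add(node.module.split(".")[0])
    return sorted(found - approved)


# A generated snippet with a misspelled package name.
generated = """
import json
import reqeusts
from math import sqrt
"""

print(find_unapproved_imports(generated))  # ['reqeusts']
```

A check like this only flags unknown names. Somebody still has to look at a flagged package and decide whether it is a typo, a hallucination or a legitimate new dependency.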

The way around that is to either set up your own ChatGPT-like product or limit the sources your developers can use and immediately validate the generated code. GitHub Copilot for Docs is an interesting idea to give you a ChatGPT-like interface but limit it to official documentation of certain projects.

One of my favourite things about it is that it allows you to customise the level of information you want the system to display. Thus it can be used to teach a junior developer the basics, as well as remind senior developers about syntax they may have forgotten.

If used inside your editing environment, this also means that code generated with these systems will automatically be checked for syntax errors and issues.
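At its simplest, such a check is the language’s own parser run over the generated snippet before anyone accepts it. A minimal sketch, assuming the generated code is Python. `check_syntax` is a hypothetical helper and not part of any editor or Copilot API, and real editors run far richer checks than this.

```python
import ast
from typing import Optional


def check_syntax(source: str) -> Optional[str]:
    """Return None if `source` parses as Python, else a short error description."""
    try:
        ast.parse(source)
    except SyntaxError as err:
        return f"line {err.lineno}: {err.msg}"
    return None


# One valid and one broken snippet, standing in for generated code.
for snippet in ("total = sum([1, 2, 3])\n", "def broken(:\n    pass\n"):
    problem = check_syntax(snippet)
    print("ok" if problem is None else f"rejected ({problem})")
```

Passing this gate only means the code parses. It says nothing about whether the code is secure, performant or legal to use, so review is still needed.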

In order to use LLMs to teach internal developers, you need to:

- Define and agree a “code hygiene” plan with the team for generated code.

- Limit results of LLMs to validated sources and internal code repositories.

- Work with management to use the time saved for best-practice training on how to validate generated code.

This is the time to fight for software development as a craft

These are some ways to counteract the “we don’t need developers, we have AI” argument whilst embracing these new ways of working in tech. I am sure there are many more and I’d love to hear your thoughts on this. Generally I think we now have the big task ahead of us to defend software development as a craft rather than a commodity. Sure, we can create a lot of stuff without any designer, writer or developer involved. But much like you can survive on microwave dishes, it is not the same as a home cooked meal.