How can we make more people watch conference videos?

Wednesday, July 26th, 2017

It is incredible how far we’ve come in the coverage of events. In the past, I recorded my own talks as audio because not many conferences offered video recordings (and I wrote about this as a good idea in the developer evangelism handbook). These days I rarely have to do this, as most conferences and even meetups record their talks. Faster upload speeds and simple, free hosting on YouTube and other platforms made that possible.

And yet it is expensive and a lot of work to record and publish videos of your conference. If you don’t make any money with them, they are advertising for your event that comes with a lot of overhead. That’s why it is a shame to see how low the viewing numbers of some great conference videos are. When talking to conference organisers, I heard some astonishingly low numbers. The only thing that seems to boost them is releasing the videos one after the other with dedicated social media promotion, which, again, is a lot of extra effort.

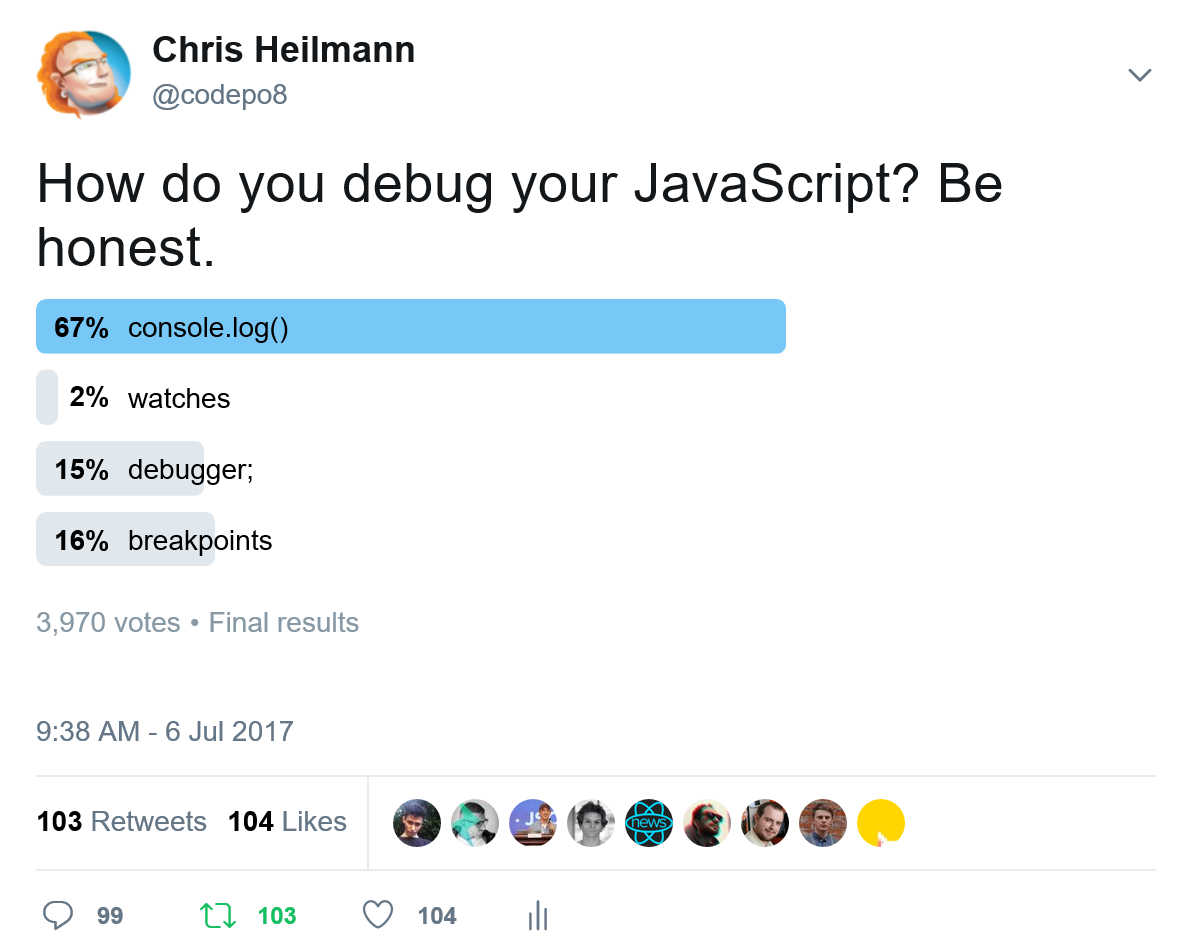

In order to see what would make people watch more conference talks, I took a quick Twitter poll:

Poll time: what would make you watch more conference videos?

— Chris Heilmann codepo8@toot.cafe (@codepo8) July 11, 2017

Here are the results from 344 votes:

- 29% chose shorter videos without Q&A

- 37% would like transcripts with timestamps

- 22% would like to have videos offline

- 12% wanted captions

I love watching conference talk videos. I watch them offline, on an iPod in the gym or on planes and trains. Whenever I am not able to do anything else, they are a great way to spend my time and learn something. There are a few things to consider to make this worth my while, though:

- The talk needs to make sense on a small screen. Lots of live code in a terminal with a 12px font doesn’t. That is not to say these aren’t good talks; they just don’t work as videos.

- The talk needs to be available offline. (I use youtube-dl to download YouTube videos, some publishers on Vimeo offer downloads, and Channel 9 always has its videos available for download.)

- The talk needs to be self-contained. It is frustrating to hear references to things I should know or to bits that happened at the same event that I wasn’t part of. It is even more annoying to sit through a Q&A session where you wait ages for the mic to arrive, or to watch the presenter answer a question I never got to hear.

I’ve written about the Q&A part of videos in detail before and I strongly believe that cutting a standard Q&A will result in more viewers and happier presenters. For starters, the videos will be shorter and it feels like less of an effort to watch the talk when it is 25 minutes instead of 45-50.

At technical events I am OK with some of these annoyances. After all, it is more important to entertain the audience in the room. And it is amazing when presenters take the time and effort to see other talks and reference them. However, there is a lot to be gained from also considering the quality of the recording and how people will consume it.

Having recordings of conference talks is an amazing gift to the community. People who can’t afford to attend events, or even to travel, can still stay up to date and find topics to dive into by watching videos. Short, easy-to-consume videos that get to the point can be a great way to increase the diversity of our market.

“You are here to talk to the online audience”

When I spoke at some TEDx events, this was the advice of the speaking coaches and organisers. TED is known for high-quality online content, and going to the main TED events is almost unaffordable. That makes this advice kind of odd, but their success online shows that they are on to something.

TED talks are much shorter than the average conference talk. They are more performance than presentation. And they come with transcripts and are downloadable.

Now, we can’t have only these kinds of talks at events. But maybe it is a good plan to edit the recordings and turn them into more of an experience, rather than a record of what happened on stage that gets delivered as soon as possible. This means extra work and overhead, for sure. But I wonder how much of it could already be automated.

In addition to the poll results there were some other good points in the comments on Twitter and Facebook.

Less of the speaker upper body and more of the slides. Or slides to download. Also, speakers who pace themselves to sound good at 1.5x speed.

It seems to be pretty common for people who watch a lot of talks to speed them up, which is an interesting concept. Good editing between slides and presenter was also something many people wished for. It shouldn’t be hard to publish the slides along with the video, and it is something presenters should consider doing more often.

Not on the list but “editing” plus a solid couple of paragraphs of what the talk covers and why I should or shouldn’t watch it.

This is another easy thing for presenters to do. We’re always asked to offer a description and title of the talk that should zing and get people excited. Providing a second one that is more descriptive to use with the video isn’t that much overhead.

For English spokers, most of the conferences, no problem. But for non English spokers, massive failure. Reading is really more easy trying to listen and understand. Some guys speaks really fast. So I can’t understand talks.

This is a common problem and a presenter skill to work on. Being understandable to non-native speakers is a huge opportunity, so pacing yourself and avoiding slang references is always a good idea.

The possibility to download the videos on tablets, smartphones and laptops so I can see them during commuting time

Offering videos for download shouldn’t be too hard. If you’re not planning to sell them anyway, why not?

Also offline availability with chapter marks/timestamps.

I’ll vote for transcripts for skim reading to get to the gold nuggets. But sometimes a good speaker is an enjoyable 50min experience.

I’d rather read transcripts. I never get blocks of quiet.

Links to slides to follow along, or (even better) closed captions so I can play them muted

If they were shorter and had a PowerPoint with main points to download after

This, of course, is the big one. A lot of people asked for transcripts, chapters and time stamps. Either for accessibility reasons or just because it is easier to skim and jump to what is important to you. This costs time and effort.

And here we have a Catch-22: if not many people watch the recordings of an event, conference organisers and companies don’t want to spend that time and effort. Manual transcription, editing and captioning isn’t cheap.

The good news is that automated transcription has advanced in leaps and bounds in recent years. Driven by the need for voice commands on mobile phones and home appliances, a lot of companies have concentrated on making it much better than it used to be.

YouTube offers free automated captions that you can edit. Most cloud providers offer video insights, too.

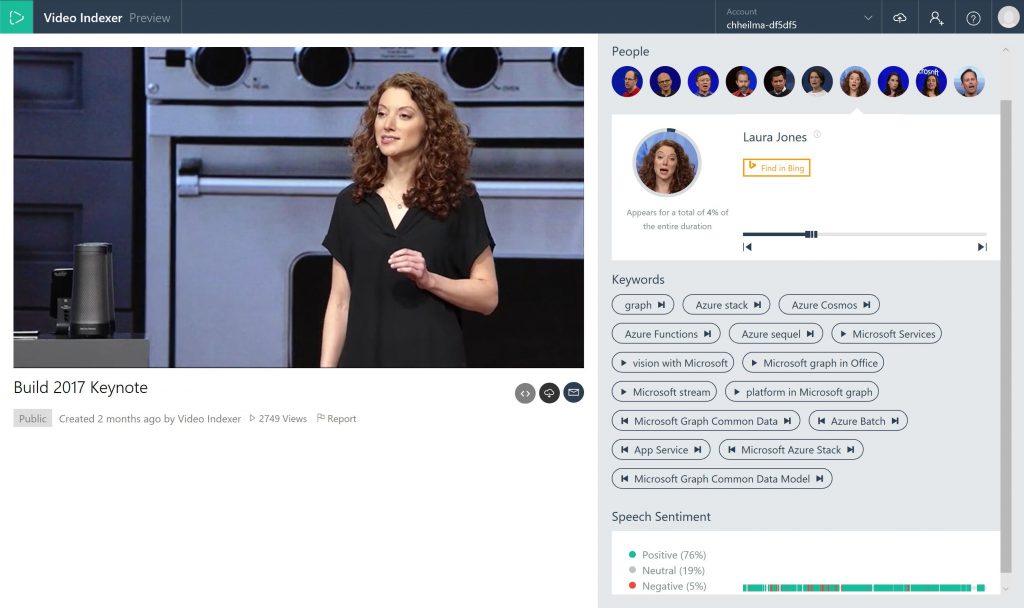

One service that blew me away is VideoIndexer.

(Yes, this is by the company I work for, but I was surprised to find that it brings together many machine learning APIs in one simple interface.)

Using VideoIndexer, you not only generate an editable time-stamped transcript, but you also get emotion recognition, image-to-text conversion of video content, speaker recognition and keyword extraction. That way you can offer an interface that allows people to jump to where they want to go without having to scrub through the video. I’d love to see more offers like these, and I am sure there are quite a few out there already in use by big TV companies and sports broadcasters.
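Once you have a time-stamped transcript, turning it into captions is a small step. The following is a minimal sketch, not VideoIndexer’s API. It assumes the transcription service can export segments with start and end times in seconds; the `Segment` type and the `format_timestamp` and `to_webvtt` functions are hypothetical names made up for this example. The output is WebVTT, the W3C caption format that HTML5 video players load through the `<track>` element.

```python
from dataclasses import dataclass


@dataclass
class Segment:
    """One hypothetical transcript segment as exported by a transcription tool."""
    start: float  # seconds from the start of the video
    end: float    # seconds from the start of the video
    text: str


def format_timestamp(seconds: float) -> str:
    """Format a time in seconds as a WebVTT timestamp (HH:MM:SS.mmm)."""
    total_ms = round(seconds * 1000)
    hours, rest = divmod(total_ms, 3_600_000)
    minutes, rest = divmod(rest, 60_000)
    secs, ms = divmod(rest, 1000)
    return f"{hours:02d}:{minutes:02d}:{secs:02d}.{ms:03d}"


def to_webvtt(segments: list[Segment]) -> str:
    """Build a WebVTT caption file: a header line, then one cue per segment."""
    lines = ["WEBVTT", ""]
    for seg in segments:
        lines.append(f"{format_timestamp(seg.start)} --> {format_timestamp(seg.end)}")
        lines.append(seg.text)
        lines.append("")  # a blank line terminates each cue
    return "\n".join(lines)


if __name__ == "__main__":
    transcript = [
        Segment(0.0, 4.5, "Hello and welcome to my talk."),
        Segment(4.5, 9.25, "Today we look at conference videos."),
    ]
    print(to_webvtt(transcript))
```

Saved as a `.vtt` file, the result can be served next to the video with a `<track kind="captions">` element, and the same segment list could just as well be rendered as a clickable, skimmable transcript.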

Summary

All in all, I am grateful to have the opportunity to watch talks from events I couldn’t be at. I’m making an effort to be a better online citizen by providing better descriptions and by being more aware of how what I say can be consumed as a video afterwards.

My favourite quote in the comments was from Tessa Mero:

Would be fun watching it with someone so we can discuss the content during/after the video. Need social engagement to make learning more fun.

Videos of talks are a great opportunity to learn something and have fun with your colleagues in the office. Pick a room, set up a machine connected to the projector, get some snacks in, watch the talk and discuss how it applies to your work afterwards. Conference organisers spend a lot of effort recording talks, and presenters put a lot of work into making them exciting and educational. You can benefit from all of that for free.

Also published on Medium