The new challenges of “open”

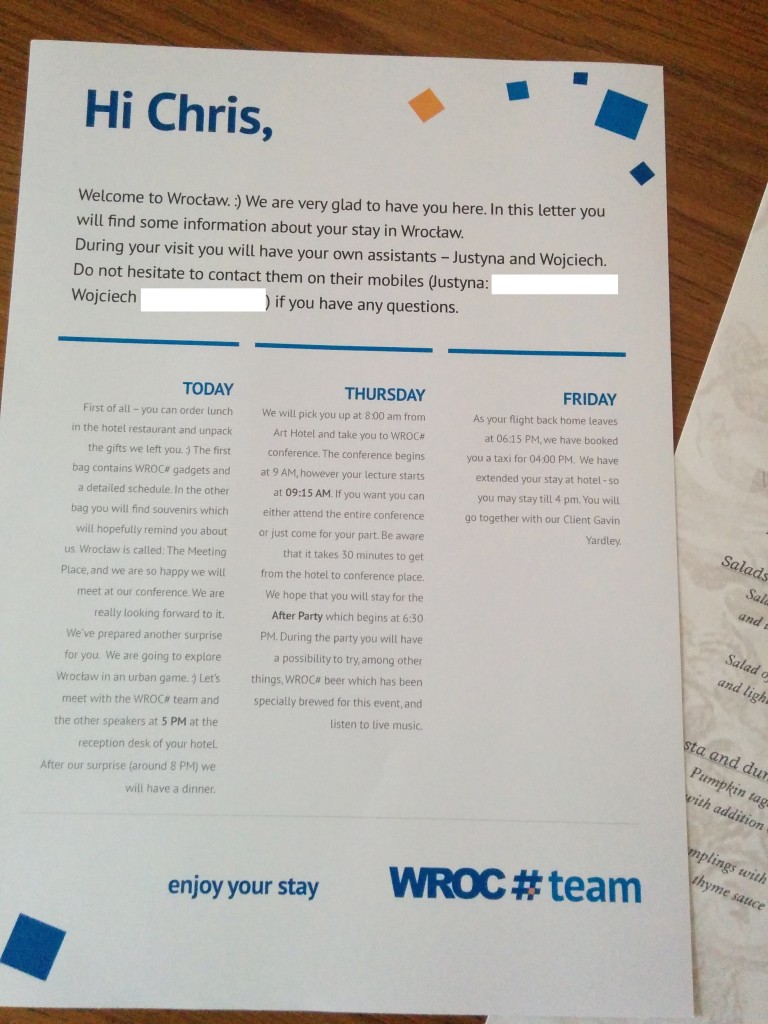

Tuesday, April 28th, 2015

These are my notes for my upcoming keynote at the Oscal conference in Tirana, Albania.

Today I want to talk about the new challenges of “open”. Open source, Creative Commons, and many other ideas of the past have become pretty much mainstream these days. It is cool to be open, and it makes sense for a lot of companies to go that way. The issue is that – as with anything fashionable and exciting – people are wont to jump on the bandwagon without playing the right tune. And this is one of the big challenges we’re facing.

Before we go into that, though, let’s recap what happens when we take our work into the open.

Creating in the open is an empowering and frightening experience. The benefits are pretty obvious:

- You share the load – people can help you with feedback, doing research for you, translating your work, building adapters to other environments for you.

- You have a good chance your work will go on without you – as you shared it, others can build upon your work when you have moved on to other challenges, or got run over by a bus.

- Sharing feels good – it’s a sort of altruism that doesn’t cost you any money and you see the immediate effect.

- You become a part of something bigger – people will use your work in ways you probably never intended, and never thought of. Seeing this is incredibly exciting.

The downsides of working in the open are based on feedback and human interaction.

- You’re under constant surveillance – you can’t hide things away when you openly share your work in progress. This can be a benefit, as constant scrutiny tends to push the quality of your product higher. It can, however, also be stifling, as you become more worried about what people think of your work than about what the work achieves.

- You have to allocate your time really well – feedback will come 24/7 and in many cases not in a format that is pleasing or – in some cases – even intelligible.

- You have to pick your battles – people will come with all kinds of requests and it is easy to get lost in pleasing the audience instead of finishing your product.

- You have to be prepared to adhere to existing procedures – years of open source work resulted in many best practices, and very outspoken people are quick to demand you adhere to them or stay off the lawn.

Hey cool kids, we’re doing the open thing!

One of the main issues with open is that people are not really aware of how much work it is. It is very fashionable to release products as open source. But, in many cases, this means putting the code on GitHub and hoping for a magical audience to help you and fix your problems. This is not how open source prospers.

Open source and related ways of working do not mean that you give out your work for free and leave it at that. They mean that you make it available, that you nurture it and that you are open to giving up control for the benefit of the wisdom of the crowd. It is a two-way, three-way, many-way exchange of data and information. You give something out, but you also get a lot back, and both deserve the same attention and respect.

More and more, I find companies and individuals seeing open sourcing not as a way of working, but as an advertising and hiring exercise. Products get released openly, but there is no infrastructure and there are no people in place to deal with the overhead this creates. It has become a ribbon to attach to your product – “also available on GitHub”.

We’ve been through that before – the mashup web and open APIs promised us developers that we could build great, scalable and wonderful products by using the power of the web. We would pick and mix our content providers with open APIs and build our interfaces on top of that data. This died really quickly, and today most of the APIs we played with have either been shut down or become pay-to-play.

Other companies see “open” as a means to keep alive things they no longer support. It’s like the mythical farm the family dog goes to when the kids ask where you took him when he got old and sick. “Don’t worry, the product doesn’t mesh with the core business of our company any longer, but it will live on as it is now open source” is the message. And it is pretty much a useless one. We need products that are supported, maintained and used. Simply giving stuff out for free doesn’t make that happen; keeping a product alive means a lot of work for its maintainers. In many cases, shutting a product down is the more honest thing to do.

If you want to be open about it – do it our way

The other issue with open is that – ironically – open communities can come across as uninviting and aggressive. We are a passionate bunch, and care a lot about what we do. That can make us appear overly defensive and aggressive. Many long-standing open communities have methodologies in place to ensure quality that, at first glance, can be daunting and off-putting.

Many companies understand the value of open, but are hesitant to open up their products because of this. The open community can come across as very demanding, and it is very easy to get an avalanche of bad feedback when you release something into the open but fail to tick all the boxes. This is poison to anyone in a large company trying to release something closed into the open. You have to justify your work to the business people in the company. And if all you have to show is an avalanche of bad feedback and passive-aggressive “oh look, evilcorp is trying to play nice” comments, they are not going to be pleased with you.

We’re not competing with technology – we’re competing with marketing and tech propaganda

The biggest issue I see with open is that it has become a tool. Many of the closed environments that are in essence a replacement for the open web are powered by open technologies. This is what open technologies are great for: the plumbing of the web runs on open. We’re a useful cog, and – to be fair – a lot of closed companies also support and give back to these products.

On the other hand, when you talk about a fully open product and try to bring it to end users, you are facing an uphill battle. Almost every open alternative to closed (or partly open) systems struggles or – if we are honest with ourselves – has failed. Firefox OS is not taking the world by storm, even though it brings connectivity to people who badly need it. The Ubuntu phone as an alternative didn’t cause a massive stir. Ello and Diaspora didn’t make a dent in the Facebooks and Twitters of this world. The Ouya game console ran into debt very quickly and is now looking to be bought out.

The reason is that we’re fooling ourselves when it comes to the current market and how it uses technology.

Longevity is dead

We love technology. We love the web. We love how it made us who we are and we celebrate the fights we fought to keep it open. We fight for freedom of choice, we fight for data retention and ownership of data, and we worry about where our data goes, whether it will be available in the future and what happens to it.

But we are not our audience. Our audience are the digital natives: the people who see a computer, a smartphone and the internet as a given. The people who don’t even know what it means to be offline, and who watch streaming TV shows in bulk without a sense of dread at how much this costs or whether it will work. If it stops working, who cares? Let’s do something else. If our phones or computers are broken, well, let’s replace them. Or go to the shop and get them repaired for us. If the phone is too slow for the next version of its operating system, fine. Obviously we need to buy a better one.

The internet and technology have become a commodity, like running water and electricity. Of course, this is not the case all over the world, and in many cases also not when you’re traveling outside the country your contracts are in. But to those who have never experienced this, it is nothing to worry about. Bit by bit, the web has become the new TV: something people consume without knowing how it works or really taking part in it.

In England, where I live, it is almost impossible to get an internet connection without some digital TV deal as part of the package. Internet access is what we use to consume content provided to us by the same people who sold us CDs, DVDs and Blu-rays. And those who consume over the internet also fill it up with content taken from this source material. Real creativity on the web, such as writing and publishing, is on the way out. When something is always available, you stop caring about it. It is simply a given.

Closed by design, consumable by nature

This really scares me. It means that the people who always fought the open web and the free nature of software have won. Not by better solutions or by more choice. But by offering convenience. We’ve allowed companies with better marketing than us to take over and tell people that by staying in their world, everything is easy and works magically. People trade freedom of choice and ownership of their information for convenience. And that is hard to beat. When everything works, why put effort in?

The dawn of this was the format of the app. It was a genius idea to make software a consumable, perishable product. We moved away from desktop apps to web-based apps a long time ago. Email, calendaring, even document handling have gone that way, and Google showed how that can be done.

With the smartphone revolution and the lack of support for open technologies in the leading platform, the app was reborn: a bespoke piece of software written for a single platform in a closed format that needs OS-specific skills and tools to create. For end users, it is an icon. It works well, it looks amazing and it ties in perfectly with the OS. Which is no surprise, as it is written exclusively for it.

Consumable, perishable products are easier to sell. That’s why the market latched on to this quickly and defined it as the new, modern way to create software.

Even worse, instead of pointing out the lack of support for interoperable and standardised technology in the operating systems of smart devices, the tech press blamed said technologies for not working on them as well as their native counterparts do.

Develop where the people are

This constant reinforcement of closed as good business and open as not ready and hard to do has taken hold in the development world. Most products these days are not created for the web, independent of OS or platform. The first port of call is iOS, and once it’s been a success there, maybe Android. But only after complaining that the fragmentation is making it impossible to work. Fragmentation that has always been a given in the open world.

A fool’s errand

It seems open has lost. It has, to a degree. But there are already signs that what’s happening is not going to last. People are getting tired of apps and of being constantly reminded by them to do things. People are getting bored of putting content into systems that don’t keep them excited, and they jump from product to product almost monthly. The big move of almost every platform towards lightweight messaging systems instead of life streams shows that there is a desperate attempt to keep people interested.

The big market people aim for is teenagers. They have a lot of time, they create a lot of interactions and they have their parents’ money to spend if they nag long enough.

The fallacy here is that many companies think that the teenagers of now will be the users of their products in the future. When I remember what I was like as a teenager, there is a small chance that this will happen.

We’re in a bubble and it is pretty much ready to burst. When the dust settles and people start wondering how anyone could be foolish enough to spend billions of dollars on companies that promise profits and pivot every few months when those profits don’t come, we’ll still be there. Much like we were during the first dotcom boom.

We’re here to help!

And this is what I want to close with. It looks dire for the open web and for open technologies right now. Yes, a lot is happening, but a lot is lip-service and many of the “open solutions” are Trojan horses trying to lock people into a certain service infrastructure.

And this is where I need you: the open source enthusiasts, and the enthusiasts of open in general. Our job now is to show that what we do works. That what we do matters. And that what we do will not only deliver now, but also in the future.

We do this by being open. By helping people to move from closed to open. Let’s be a guiding mentor, let’s push gently instead of getting up in arms when something is not 100% open. Let’s show that open means that you build for the users and the creators of now and of tomorrow – regardless of what is fashionable or shiny.

We have to move with the evolution of computing, much like anybody else. And we do it by merging with the closed, not by trying to replace it. Trying to replace it has failed, and it will fail in the future, too. We’re not here to create consumables. We’re here to make sure they are made from great, sustainable and healthy parts.