Web Truths: The web is world-wide and needs to be more inclusive

Wednesday, December 13th, 2017

This is part of the web truths series of posts. A series where we look at true-sounding statements that we keep using to have endless discussions instead of moving on. Today I want to talk about the notion of the web as being a world-wide publishing platform and having to support all environments no matter how basic.

The web is world-wide and needs to be more inclusive

Well, yes, sure, the world-wide-web is world-wide and anyone can be part of it. Hosting is not hard to find and creating a web site is pretty straight-forward, too.

Web content needs to be as inclusive as possible. That’s common sense. If we enable more people to access our content, there is a higher chance we create something that is worthwhile.

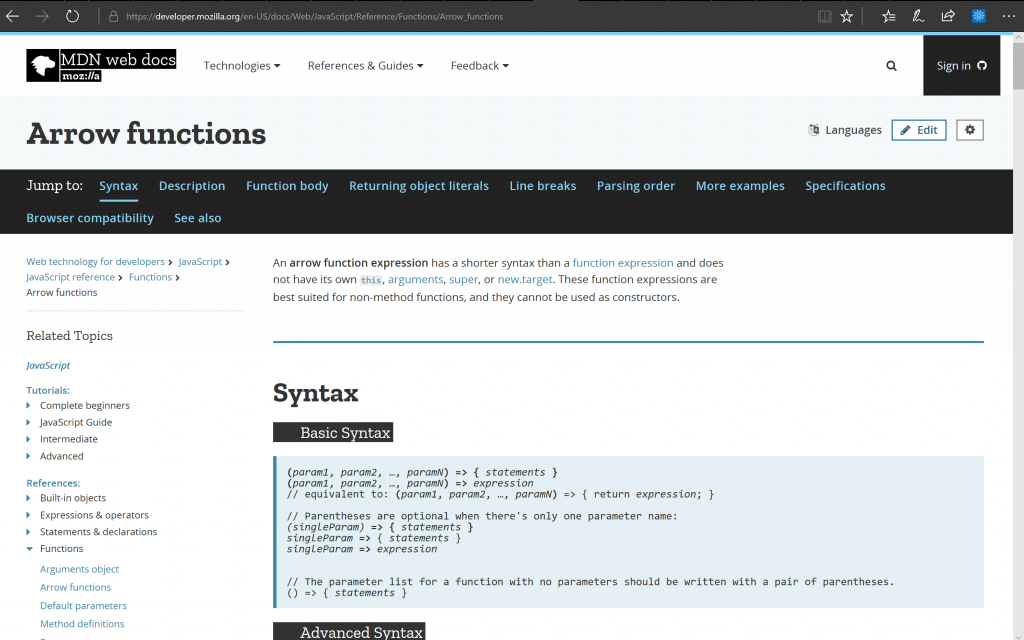

There is no question at all that web content should not exclude people because of their ability. As web content started out as text, this wasn’t extra effort: alternative text for images, descriptions for complex visuals and transcripts for videos. These, together with assistive technology and translation services, allowed for access. I won’t talk about accessibility here. This is about availability.

For those who started early on the web these are basic principles you need to follow when you want to play on the web. Which is why we always cry foul when someone violates them.

- When someone creates a product that only works in a certain market

- When something is only in one language or assumes people know how to work around that problem

- When a product assumes a certain setup, operating system or browser

- When a product relies on new technology without a fallback option for older browsers

These are all valid points and in a perfect world, it would be a problem for a publisher not to address them. We don’t live in a perfect world though, and the sad fact is that the web we defend with these ideas never made it. It died much earlier when we forgot a basic principle of publication: how do you make money with it?

If all you want is to publish something on the web, and your reward is that it will be available for people, you’re set. This attitude is either fierce altruism or stems from a position where you can afford to be generous.

For many, this isn’t what the web is about. Very early on the web was sold as a gold-mine. You create a web site and customers will come. Customers ready to pay for your services. Your web site is a 24/7 storefront that doesn’t have any overhead like a call centre would.

Amongst other problems, this marketing message led to the mess that the web has become. It isn’t about making your content available. It is about reaching people ready to pay for your product or at least cover your costs. Those aren’t hypothetical users all over the world. For most companies that rely on making money these are affluent audiences located where the company itself is. There are, of course, exceptions to this and some companies like the NYC based dating site Ignighter survived by realising their audience is coming from somewhere else. But these are scarce, and the safe bet or fast fail for most publishers was to reach people where they are.

You can’t make money if you spend too much, and thus you cut corners. Sure, it sounds interesting to have your product available world-wide. But it is more cost-efficient to stick to the markets you know and can bill people in. That’s why the world-wide-web we wanted to have is in essence a collection of smaller, local webs.

I’m moving from England to Germany. I’m amazed how many people use local web products instead of those I use. Some are because of their offers – the Netflix catalogue for example is much larger in the UK. But many are a success because of classic advertising on TV, in trains and newspapers.

As competition grew it wasn’t about creating valuable content and maintaining it any longer. It was about adding what you offer and finding shortcuts to get yourself found instead of your competition. And thus search engine optimisation came about and got funding and effort.

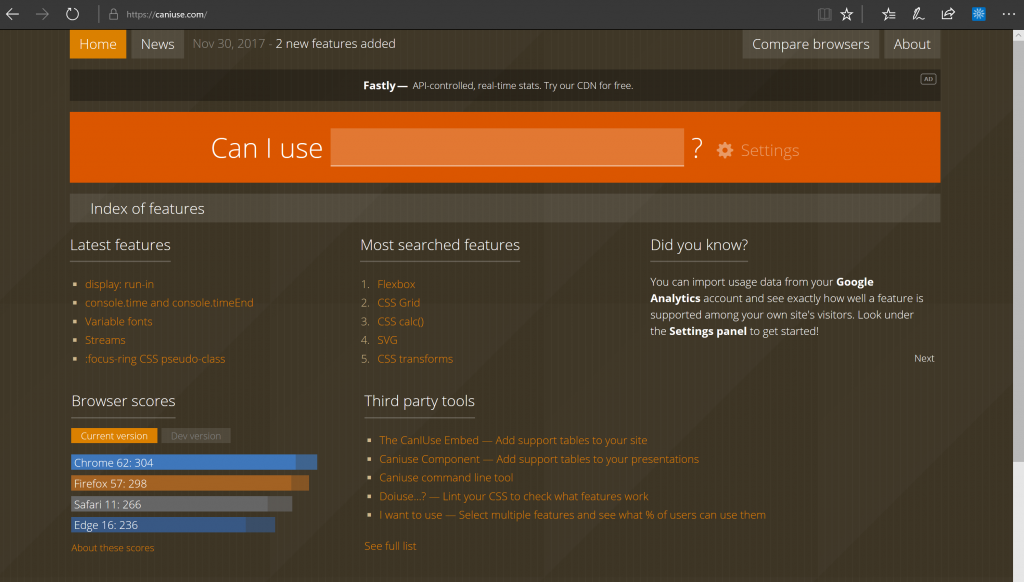

Of course it makes sense to have your product available in one form or another on all browsers and on enterprise and legacy environments. But is it worth the extra effort? It is when you start with your aim being publishing content. But many web products now don’t start with content, they start as a platform and get products added in a CMS. And that’s when browser market share is the measure, not supporting everybody. A browser that works well on desktop and is also available on the high-end smartphones is what people optimise for. Because that’s what every sales person tells you is the target audience and they can show fancy numbers to prove that. It is understandable that this seems short-sighted to us, but we also need to prove that it really is. In a measurable way, with real numbers of how much people lost by betting on one environment in the short term.

The sad fact we have to face now is that the web is not the main focus of people who want to offer content online any longer. Which seems strange, as apps are much harder to create and you are at the mercy of the store publishers.

Apple just announced that next year they will disallow templated and generated apps. This is a blow to a lot of smaller publishers who bet on iOS as the main channel to paying customers. No worry, though, they can easily create a web site and turn it into a PWA, right?

Well, maybe. But there is an interesting statistic in the Apple article:

According to 2017 data from Flurry, mobile browser usage dropped from 20 percent in 2013 to just 8 percent in 2016, with the rest of our time spent in apps, for example. They are our doorway to the web and the way we interact with services.

Of course, one stat can be debated, and the time we spend in apps doesn’t mean we also buy things in them or click on ads. A good web site may be used for 30 seconds and leave me a happy customer. However, new users are more likely to look for an app than enter or click a URL. The reason is that apps are advertised as more reliable and focused whereas the web is a mess.

On the surface, for Apple this isn’t about punishing smaller publishers. This is a move to clean up their store. A lot of these kinds of apps are either low quality or meant to look like successful ones. It reminds us of the time when SEO efforts did exactly the same thing. Companies created dozens of sites that looked great. They had custom-made content to attract Google and to create inbound links to the site they got paid to promote.

The sad truth about the web and the fact that publishing is easy is that the cleaning step never happens. The best content hasn’t won on the web for years now. The most promoted content, the one using the dirtiest tricks whilst skirting Google’s terms and conditions, did.

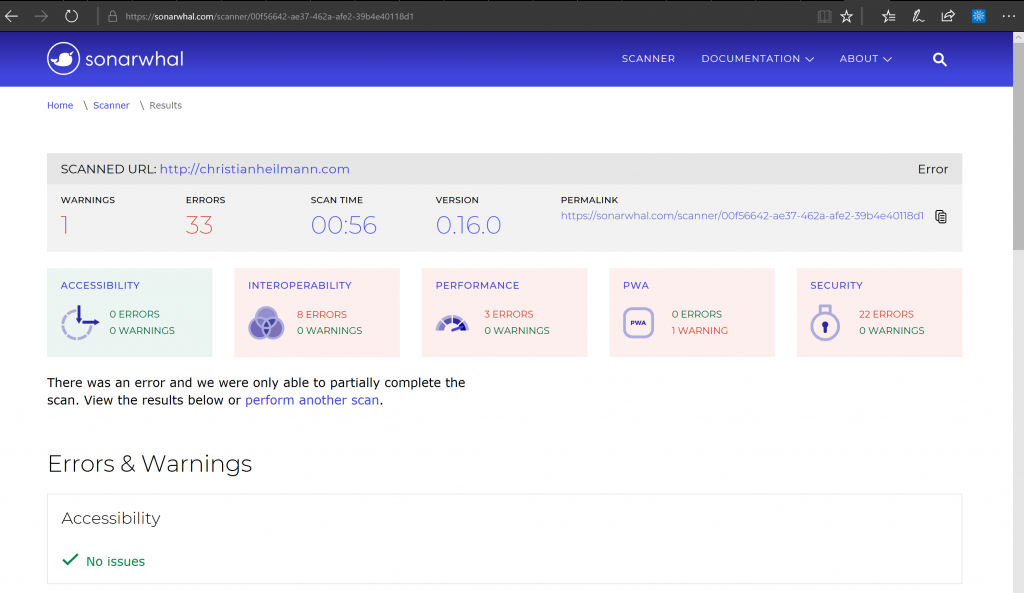

A lot of what is web content now is too heavy and too demanding for people coming to the web. People on mobile devices with very limited data traffic at their disposal.

That’s no problem though, right? We can slim down the web and make it available to these new markets. There is no shortage of talks at the moment about reaching the next billion users. By doing less, by using Service Workers to create offline-ready functionality. By concentrating on what browsers can do for us instead of simulating it with frameworks.

Maybe this is the solution. But there is a problem: often we come from a position of privilege that is much further from the truth than we’d like to admit. Natalie Pistunovich has a great talk called Developing for the next Billion where she talks about her experiences releasing a product in Kenya, one of the big growth markets.

There is a lot of interesting information in there and some hard to swallow truths. One of them is that smartphones are not as available as we think they are – even low end Androids. And the second is that even when they are, people don’t have reliable data connections. Instead of going to web sites people send apps to each other using Bluetooth. Or they offer and sell products on WhatsApp groups – as these tend to be exempt from the monthly data allowance. With Net Neutrality under attack in the US right now, this could be the same for us soon, too.

Of course these are extreme conditions. But the fact remains that closed systems like WhatsApp allow these people to sell online where the web failed. Not because of its nature and technologies, which are capable enough for that. But because of what it became over the years.

The web may be world-wide as a design idea, but the realities of connectivity and availability of web-ready hardware are a different story. Much like anything else, a few have both in abundance and squander it whilst others who could benefit the most don’t even know how to start.

That’s why the “reaching the next million users with bleeding edge web technology” messages ring hollow. And it doesn’t help to tell us over and over again that it could be different. We have to make it happen.