An open proposal to OpenAI, Bard, Amazon, et al…: GPTeachers

Tuesday, May 9th, 2023 at 5:13 pm

First of all, congratulations. You managed to kick off the next step of computer and human interaction evolution. Of course we’re in the middle of a hype, but there is so much good in AI generated content and conversational interfaces, it’s pretty safe to say all things “computer” will have some part of this included going forward.

You have an issue though: relevance. Or, as some call it, hallucinations. Systems that are fed lots of data without much quality control, and that make even erroneous data sound like the best thing since sliced bread, could turn out to be disastrous in the long run. Just because something sounds clever doesn’t make it good.

Here’s the bad news: the wisdom of the masses will not save you. As someone who worked on products with millions of users and highly visible interfaces like browsers, I can tell you with great confidence that people will not downvote and report wrong results.

The idea of increasing the weight of results that were the end of a conversation is also flawed. It’s like asking your obviously miffed partner what’s wrong and they answer “nothing”. This is not the end of that conversation.

Dissatisfied users do not put in the extra effort to downvote a wrong result. They just leave, as they feel that they have already wasted enough time with your product.

How to make that better? Well, one way is to give your systems a specific context and limit them to it. GitHub Copilot for docs is a great example of that.

The other, and here is where I come in with my pitch, is to enlist editors, writers and tech experts to flood your product with great solutions, downvote obviously wrong ones and annotate others to explain that whilst this is a solution, it may come with a caveat.

The great opportunity you have right now is that the market is saturated with laid off people who have some time on their hands. These are experts in certain fields: programmers, designers, product managers and many others. Ironically, you will also find a lot of technical writers to work with right now, as they have been laid off as part of an overreaction to your success. Who needs authors when the machine can generate lots of text for free (or pennies in traffic)?

Of course, creating a community of experts to talk to your machines takes time and effort. Guess what? A lot of community managers were also laid off when companies closed their tech communities.

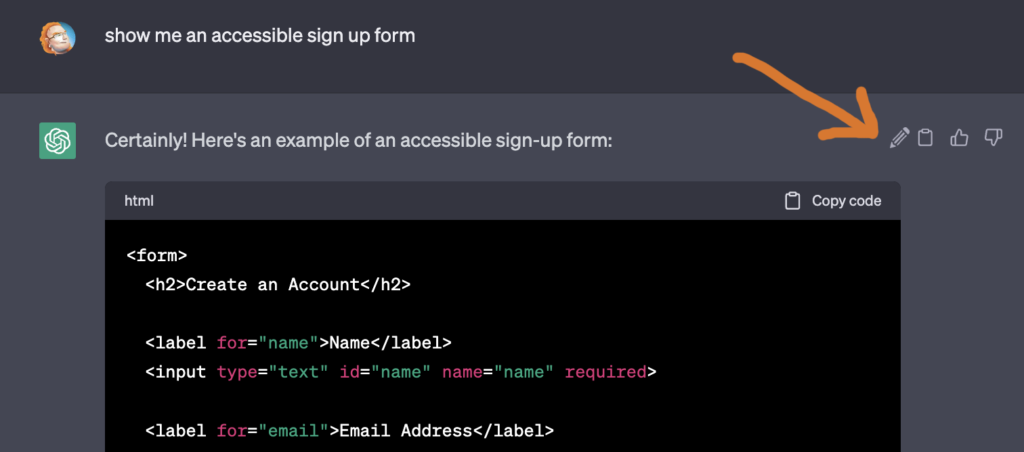

As an expert editor or “GPTeacher”, I could get, in addition to the upvote, downvote and copy icons, one that allows me to edit the response. My edit would go into a review process with two others and then back into the system.

As a developer known to you as a “GPTeacher”, I could write code examples on GitHub and tag them with a special tag such as #gptfodder (or whatever). Again, after a peer review by others, these examples would get more weight and be shown more frequently in the conversations people have with your systems.
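To make the review idea a bit more concrete, here is a minimal Python sketch of how such a peer-review gate could work. Everything in it is an assumption for illustration: the `Submission` class, the threshold of two approvals (taken from the “two others” mentioned above) and the weight values are made up and not part of any real product or API.

```python
from dataclasses import dataclass, field

# Assumptions for illustration only, not values from any real system.
REQUIRED_APPROVALS = 2   # "two others" have to sign off
DEFAULT_WEIGHT = 1.0     # weight of unreviewed content
APPROVED_WEIGHT = 2.0    # arbitrary boost once peer review has passed


@dataclass
class Submission:
    """A hypothetical edited answer or tagged code example from a GPTeacher."""
    author: str
    text: str
    approvals: set[str] = field(default_factory=set)
    weight: float = DEFAULT_WEIGHT

    @property
    def is_accepted(self) -> bool:
        # Accepted once enough distinct reviewers have approved.
        return len(self.approvals) >= REQUIRED_APPROVALS

    def approve(self, reviewer: str) -> None:
        # Authors must not review their own work.
        if reviewer == self.author:
            raise ValueError("authors cannot review their own submission")
        # A set ignores repeated approvals from the same reviewer.
        self.approvals.add(reviewer)
        if self.is_accepted:
            self.weight = APPROVED_WEIGHT
```

A submission by `alice` would keep its default weight after one approval from `bob`, and only get the higher weight once a second, different reviewer such as `carol` approves it as well. How that weight then influences what the system shows is up to you; the point is only that nothing gets boosted without human sign-off.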

I know, all of this feels a bit old school and Wikipedia-ish. But, it worked in the past and it scales much better than hiring a lot of people in outsourcing markets to clean up things that are obviously wrong.

We are at a crucial point here. Some governments already work on laws to block your systems as there is no attribution or licence information in your results. It would be a shame if we let machines educate people the wrong way because we were not interested in giving the system human supervision when it was needed.

Want to know more? Let’s start a conversation and build a community of enthusiastic experts who are happy to teach the machine to do better, rather than hoping people will be able to see the flaws in the conveniently offered, clever sounding solution.