Ink-trap development

Thursday, March 1st, 2018 at 3:20 pm

Sometimes you see things that look a bit off and it makes you feel uneasy. When something challenges your sense of quality and what appears well-made. Like the following font:

This doesn’t look good, and it doesn’t look right. It feels like someone didn’t quite finish it or didn’t know how to use tools. Or – even worse – didn’t use the right tools for the job. This font, for example, looks like my first attempts to get my head around vectors in Photoshop. Not a memory I cherish.

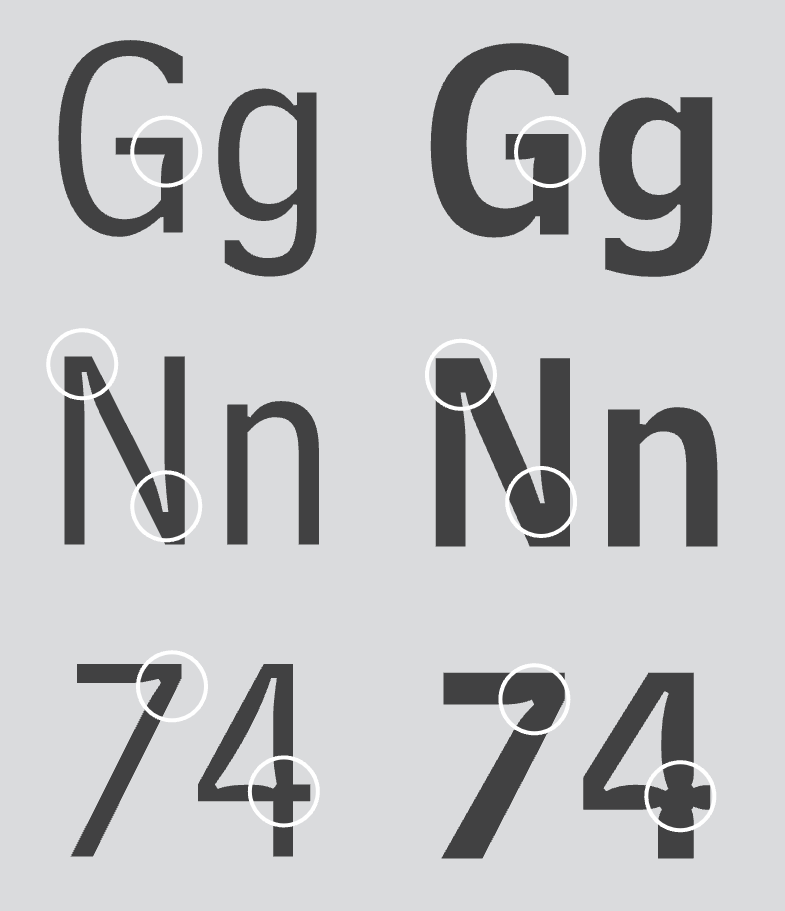

In the case of this font, though, none of this applies. What you see here is Bell Centennial by Matthew Carter, a well-regarded font expert. It is a font that has seen a massive amount of use.

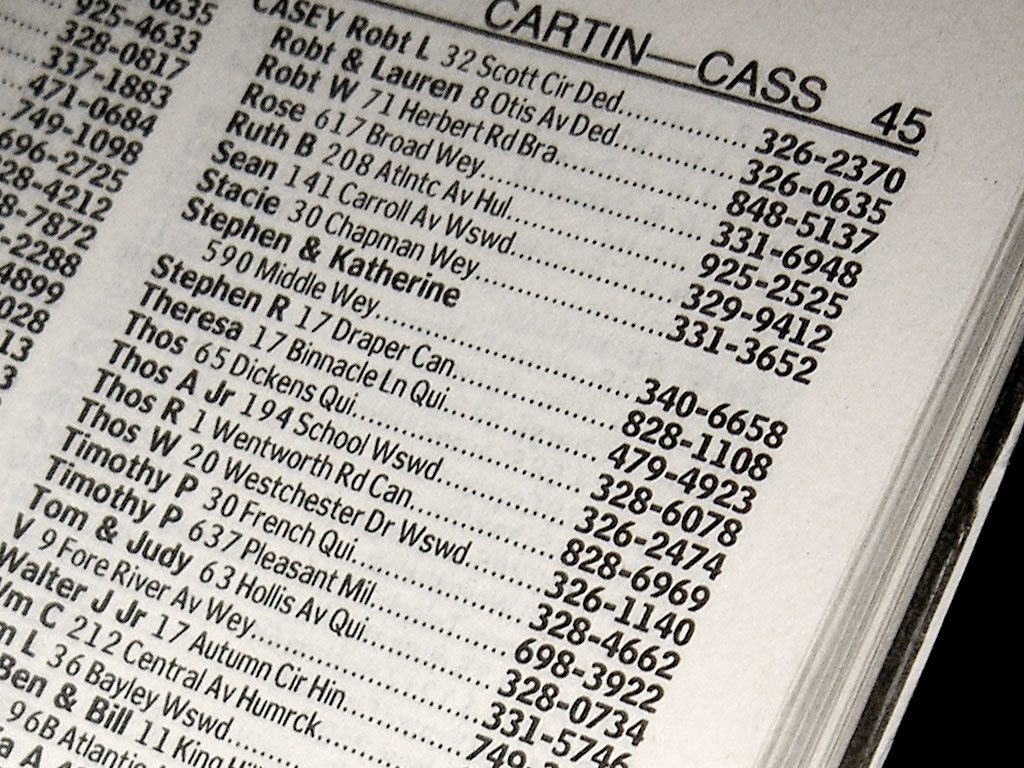

You may not recall ever seeing it, but here’s the deal: Bell Centennial was the font used in AT&T phonebooks. The weird missing parts of the letters in it are so-called “ink traps”. The genius of the ink trap is that it makes the font work when applied in its natural environment.

Phone books shouldn’t cost much to print, which is why they use cheap paper. They also are big and should use small fonts, allowing for more content in fewer pages. In essence, the implementation phase of the project phone book tried to cut corners. Staying as cheap as possible.

This is where the ink traps come in. Ink bleeds out on cheap paper and blurs the letters. A font with no spaces can thus become unreadable. Not so Bell Centennial. On the contrary – it gets better and more legible when printed. The ink traps fill with ink and make the weirdness of the font go away.

I like this approach a lot. There is a lot of merit in creating something and assuming a flawed implementation. As they all are in one way or another. By leaving things out to become available later – or not at all – we can concentrate on what’s important. A solid base that may not be perfect, but will not expect the implementer to do everything right, either.

Because they never do. In twenty years in this industry I’ve never seen a project get out the door the way we planned it. It was even more common to see projects getting cancelled halfway through. And not only waterfall projects. Even the safeguard of the Agile Manifesto keeps failing.

In-built failure obscured just enough to make money

When I worked for an agency in the B2B space, this even seemed to be a common theme. Get the project, make sure you have a nice round sum in the contract that you have to pay in case you fail. Then pad the project with lots of unnecessary but expensive heads in the early stages. Bill all those to the client. Mess up and pay the fine when everything goes pear-shaped, leaving you with a nice profit.

We want to build for the future…

As a technical person, these projects were frustrating to work on. We’re lazy. We don’t want to do the same things over and over again. We want to do something and then automate it. We are all about making sure what we do is maintainable, extensible and follows standards. Because that makes outcomes predictable. For years we’ve been preaching that our code should be cleaner. It shouldn’t make assumptions. It should allow for erroneous data coming in and try to fix it. It should be generic, not specific. We preach all this to avoid unpleasant surprises in the future.

Future imperfect

And yet, I have a hard time remembering when projects that we put so much effort into ever became a reality. They got out, yes, but somewhere along the way someone cut corners. And what is now live was never something I was particularly proud of. Other projects that appeared rushed and made me feel dirty got out and flourished. Often they also vanished soon after, and oddly enough, the sky didn’t fall on our heads. Nobody missed them. They had done their job and overstayed their welcome. It was wasted effort to make them scale to forever and try to predict all the future needs. As there was no future for them. It is easier to fold and restart a software project than to dismantle and change a physical product. That’s the beauty of software. That’s what makes it soft.

I’ve hardly ever seen the rewards of the hard work of making products maintainable in a clean manner. I am not saying they don’t make sense. I am saying that far too often, something got in the way. Something I had no control over. Often things I didn’t even hear about until things went pear-shaped:

- Our well-thought-out design system hardly ever evolved with the product. Instead, redesigns would wipe it out completely. Or the product would move to another CMS or backend. All of a sudden this brought new limitations incompatible with the original design.

- Our clean, accessible and semantic templates met a similar fate. After they had been tossed around for a few cycles of maintenance, someone would have removed or warped the goodness we added upfront. Audits will flag this up, but with no immediate repercussions the invalid bits will end up on the backlog. There are new things to add, after all.

New toys with new battery requirements

A common scenario is new product owners trying to make their mark by bringing the new tech hotness. Hotness they don’t understand and don’t hire able people for. Instead, it means starting new and following the “best practices” of the new hotness. What works for Facebook will also scale and apply to this internal payroll system, right?

Is failure a given? Maybe…

I’m sorry if this sounds bleak, but this seems to be a good time to re-consider what we are doing. We’ve had a few decades of computers turning from a tool for scientists into an integral part of life. And this means we need to level up our game. This is not a cool tech thing to play with. This is an integral part of life. People’s identities, freedom, security, safety and happiness are dependent on it.

Broken toys

The quality of what we rely on isn’t that encouraging. If anything, a lot of the sins of the past now come to light – even on a processor level. We built a lot of spur-of-the-moment, good-enough things and hoped nobody would look closer. Well, the bad players of the market seem to be the ones looking and taking advantage.

Where in the past we hid odd code in obscure byte code, we now create huge dependency chains and lots of components. Components easy to re-use. Components not under the scrutiny of any quality assurance or security audits. Instead we rely on the wisdom of the masses and the open source community.

This would work if the OSS world hadn’t exploded in recent years. And if we didn’t tell newcomers to “not worry” and “just use what is there” to be more effective. We’re chasing our tail trying to be more effective and creating a lot with not much code. We move further and further away from the implementers and maintainers of our code. That’s what abstraction offers. Nobody likes maintenance. But it is probably the most important part there is. Any non-maintained project is a potential attack vector.

We forget that someone, somewhere needs to ensure that what we do doesn’t become a security nightmare. Instead, we create tools that check existing solutions for problems after the fact. This is a good measure, but it kind of feels self-serving. It also assumes that companies care to use these tools, and care about how they use them.

Case study: when the law required accessibility

I remember accessibility going through the same motions. When being accessible to people with impaired abilities became a legal need, people started to care. They didn’t care enough to look into their process of creating accessible products, as in – start with a sensible baseline. They didn’t want to understand the needs. They wanted to know how not to get sued. They bought a tool to check what’s obviously broken. They displayed a “Bobby Approved” or WCAG-AAA compliant banner on their web sites. They achieved that by adding alternative text like “image” to images. Satisfying the testing tool instead of creating benefit to visually impaired users.

This enraged the purists (including me) as it showed that people didn’t understand why we wanted things to be accessible. So we ranted and shone bright lights at their mistakes to shame people into doing the right thing. The net effect was that people stopped reaching out to experts when it came to accessibility. Instead they hired third-party vendors to care on their behalf and build the thing needed to not get sued.
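The alt-text trick from this case study is easy to reproduce in a few lines. What follows is only a rough sketch, not how Bobby or any real audit tool works: the function names and the list of placeholder words are made up for illustration. It shows a check that is happy as soon as some alternative text exists, and a slightly stricter one that at least refuses obvious filler.

```python
from html.parser import HTMLParser

# Hypothetical list of filler words that say nothing about an image.
PLACEHOLDER_ALTS = {"image", "picture", "photo", "graphic", "img"}


class ImgAltCollector(HTMLParser):
    """Collects the alt attribute (or None if missing) of every <img> tag."""

    def __init__(self):
        super().__init__()
        self.alts = []

    def handle_starttag(self, tag, attrs):
        if tag == "img":
            self.alts.append(dict(attrs).get("alt"))


def collect_alts(html):
    parser = ImgAltCollector()
    parser.feed(html)
    parser.close()
    return parser.alts


def naive_check(html):
    """Passes as long as every image carries some non-empty alt text.

    Simplification: a real audit would accept an empty alt on purely
    decorative images. This sketch does not.
    """
    return all(alt for alt in collect_alts(html))


def stricter_check(html):
    """Also rejects alt text that is nothing but a placeholder word."""
    return all(
        alt and alt.strip().lower() not in PLACEHOLDER_ALTS
        for alt in collect_alts(html)
    )


gamed = '<img src="team.jpg" alt="image">'
useful = '<img src="team.jpg" alt="Five colleagues at a whiteboard">'

print(naive_check(gamed), stricter_check(gamed))
print(naive_check(useful), stricter_check(useful))
```

Even the stricter version only catches the laziest workaround. Whether the text actually helps a visually impaired user is still something a person has to judge, which is the point of the story above.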

A lack of shared responsibility

It makes you wonder if we are trying too hard. If the current messy state of affairs in the whole of IT is based on a lack of shared responsibilities. Communication doesn’t help if it only states “don’t worry, it works”. An “of course our product will scale with you” is worrying without any shared ownership of the “how”. There is a disconnect between what we build and who maintains and uses it that leaves everyone involved disappointed.

This is tough to swallow and does rattle a few basic pillars of how we make money in the IT world. We build products and frameworks that enable people to do their jobs. We don’t want them to mess with the products though, so they become very generic. Generic solutions are less likely to give an optimised experience. They also are less likely to result in performant solutions catered to the current need. We throw in the kitchen sink and the plumbing and wonder why people run out of space. And we get angry when they start fiddling with the wrong pipes or don’t fix those that leak as long as the water runs.

Many products are terrible, but nobody involved did anything wrong

We’re quick to complain about users not doing things right. But we also never cared much about how people use our products. We hand this task over to the UX people, the trainers, the help desk and the maintenance staff. It is easy to complain about systems we have to use and wonder how on earth these things happened. The reason is simple: we created generic solutions and tweaked the output. We didn’t remove any extra features, but made them options to turn on and off instead. Many of our solutions are like a car with five steering wheels in different sizes. We expect the drivers to pick the right one and get cross when they’re confused.

Ink-trap development?

Maybe this is a good time to reflect and consider an approach like Matthew Carter did. We know that things will go wrong and that the end product is not perfect. So we optimise for that scenario instead of offering a huge, generic solution and hoping people only use what they need. Maybe coding isn’t for a small, elite group to deliver interfaces to people. Maybe we need to have more involvement all the way from design to use of our products, and then we will create what is needed. This means that a lot more development would be in-house rather than buying or using generic solutions. Sure, this will be much more boring for us. But maybe not trying to predict all the use cases people might use our products for will give us more time to have a life outside of our work.

Working in IT isn’t an oddity any longer, it is one of the few growing job markets. Maybe this means we have to dial back our ambitions to change the world by building things people don’t have to understand. Instead, we could try to build products based on communication upwards and sideways. And not those that only get feedback from user testing sessions once in a blue moon. People are messy. Software won’t change that. But it could give people ways to mess up without opening a whole can of worms by sticking to features people really need and use. We can always expand later, can’t we?