Helping or hurting? (keynote talk at jQuery Europe)

Wednesday, February 20th, 2013 at 7:19 pm

This was my closing keynote talk of the first day of jQuery Europe 2013 in Vienna, Austria. The slide deck is here. Sadly, due to technical issues there is no audio or video recording.

As developers, we seem to be hardwired to try to fix things. And once we have managed something, we get bored with it and want to automate it so that in the future we don’t have to think about it any longer. We are already preoccupied with solving the next puzzle. We delude ourselves into thinking that everything can get a process that makes it easier. After all, this is what programming was meant for – doing boring repetitive tasks for us so we can use our brains for things that need more lateral thinking and analysis.

Going back in time

When I started as a web developer, there was no such thing as CSS and JavaScript’s interaction with the browser was a total mess. Yet we were asked to build web sites that look exactly like the Photoshop files our designers sent us. That’s why in order to display a site correctly we used tables for layout:

    <table width="1" border="1" cellpadding="1" cellspacing="1">
      <tr>
        <td width="1"><img src="dot_clear.gif" width="1" height="1" alt=""></td>
        <td width="100"><img src="dot_clear.gif" width="100" height="1" alt=""></td>
      </tr>
      <tr>
        <td align="center" valign="middle" bgcolor="green">
          <font face="arial" size="2" color="black">
            <div class="text"> 1_1 </div>
          </font>
        </td>
        <td align="center" valign="middle" bgcolor="green">
          <font face="arial" size="2" color="black">
            <div class="text"> 1_2 </div>
          </font>
        </td>
      </tr>
    </table>

Everything in there was needed to render properly across different browsers, including the repeated width definitions in the cells and the filler GIF images. The DIVs with class “text” inside the font elements were needed as Netscape 4 didn’t apply any styling without its own element and class inside a FONT element.

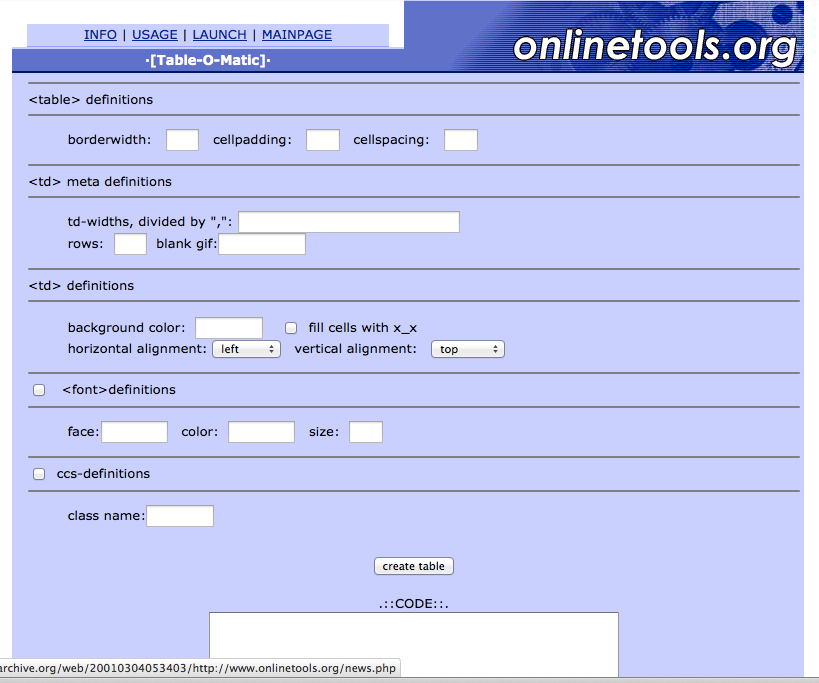

Horrible hacks, but once we had tested and discovered the tricks that were needed, we made sure we didn’t have to do it from scratch every time. Instead we used snippets in our editors (Homesite in my case back then) and created generators. My “table-o-matic” is still available on the Wayback Machine:

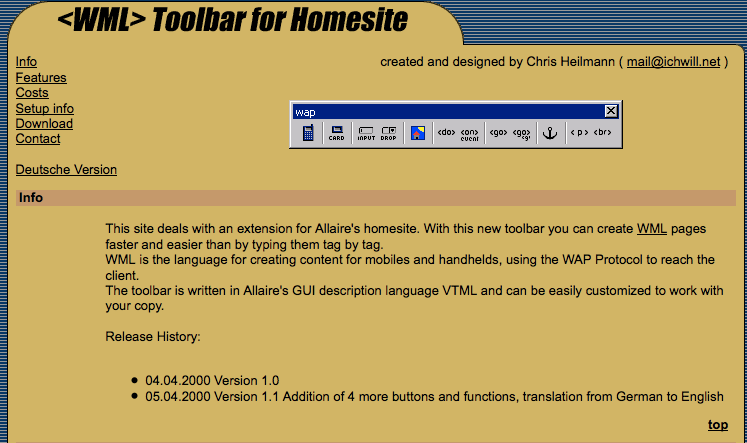

Moving into hard-core production we shifted these generators and tricks into the development environment. Instead of having a web site, I created toolbars for Homesite for different repetitive tasks we had to do. I couldn’t find the original table toolbar any longer but I found the WAP-o-matic, which was the same thing to create the outline of a document for the – then – technology of the future called WML:

eToys, the company I worked for back then, was as hard-core as they come. Our site was an ecommerce site that had millions of busy users on awful connections. Omitting quotes in our HTML was not a matter of being hipster cool about HTML5, it was a necessity to make things work on a 56k dial-up connection when browsers didn’t have any gzip support built in.

Using the table tools it was great to build a massively complex page and see it working the same across all the browsers of the day and the older ones still in use (think AOL users).

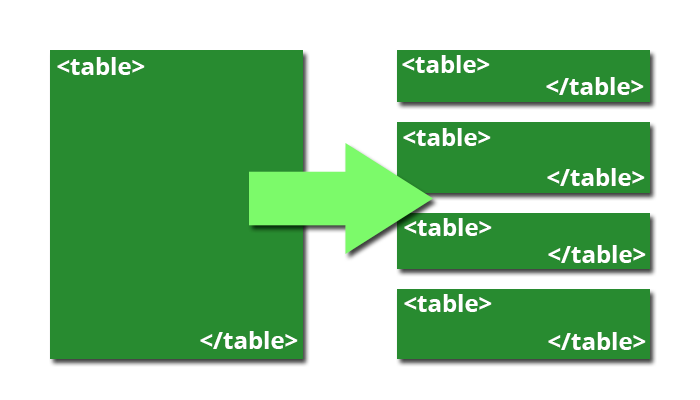

But there was an issue. It turned out that when the content was a table, browsers didn’t render the page at all until the whole document had loaded. Netscape also didn’t render the table at all when there was a syntax error in your HTML. In order to speed up rendering you had to cut up your page into several tables, which would render one after another. With a defined header in our company it was possible to still automate that both in the editor and in templates. But it limited us. We had a race between progressive rendering of the page and the amount of HTML we sent to the browser. Add to this that caching was much more hit and miss back then and you are in a tough spot.

The rise of search engines was the next stumbling block for table layouts. Instead of having lots of HTML that defines the main navigation and header first-up (remember, source order defined visual display) it became more and more important to get the text content of the page higher up in the source. The trick was to use a colspan of 2 on the main content and add an empty cell first.

Helper tools need to evolve with market needs

The fact remains that back then this was state of the art, and automating the hacks once we knew them made our lives much, much easier. The problem though is that when you deliver tools like these to developers, you need to keep up with changes in the requirements, too. Company internal tools suffer less from that problem as they are crucial to delivery. But tools we threw out for fun and to help other developers are more likely to get stale once we move on to solve other problems.

No browser in use these days needs table layouts and they are far too rigid for the different environments we want to deliver HTML to. Luckily enough, I don’t know anyone who uses these things any longer and whilst they were amazingly successful at the time, I cut the cord and took them offline. No sense in tempting people to do pointless things just because they seem the simplest thing to do.

When we look at the generated code now it makes us feel uneasy. At least it should – if it doesn’t, you’ve been working in enterprise too long. There was some backlash when I deleted my tools because once we engineers like something and find it useful, we love to defend it tooth and nail:

Arguing with an engineer is a lot like wrestling in the mud with a pig. After a few hours, you realize that he likes it.

What I found over and over again in my career as a developer is that our tools are there to make our jobs easier, but they also have to continuously evolve with the market. If they don’t, then we stifle the market’s evolution as developers will stick to outdated techniques as change means having to put effort in.

Never knowing the why stops us from learning

Even worse, tools that make things much easier for developers can ensure that new developers coming into the market have no clue what they are doing. They just use what the experts use and what makes their lives easier. In software that works as there is no physical part that could fail. You can’t be as good as a star athlete by buying the same pair of shoes. But you can use a few plugins, scripts and libraries and put together something very impressive.

This is good, and I am sure it helped the web become the mainstream it is now. We made it much easier to build things for the web. But the price we paid is that we have a work-force that is dependent on tools and libraries and forgot about the basics. For every job offer for “JavaScript developer” you’ll get ten applicants who only ever used libraries and fail to write a simple loop using only what browsers give you.

Sure, there is a reason for that: legacy browsers. It can seem like a waste of time to explain event handling to someone who is just learning JavaScript when you have to tell them the standard way and then how old Internet Explorer does it. It seems boring and repetitive. We lived with that pain long enough not to want to pass it on to the next generation of developers.
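The two code paths in question can be sketched as follows. This is a minimal illustration rather than code from the talk: the helper name addEvent is hypothetical, and "old Internet Explorer" is assumed to mean versions 8 and below, which only offer attachEvent.

```javascript
// Hypothetical helper (not from the talk): attach an event handler using
// the standard API, falling back to the old Internet Explorer API.
function addEvent(element, type, handler) {
  if (typeof element.addEventListener === "function") {
    // The standard way: W3C DOM events.
    element.addEventListener(type, handler, false);
  } else if (element.attachEvent) {
    // Old Internet Explorer: the event name needs an "on" prefix and the
    // handler would run with `this` set to window, so we rebind it.
    element.attachEvent("on" + type, function (event) {
      handler.call(element, event);
    });
  }
}
```

In a browser this could be called as addEvent(document.getElementById("menu"), "click", openMenu), where both the id and openMenu are made-up names. Seeing the two branches side by side is exactly the knowledge a library hides.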

The solution though is not to abstract this pain away. We are in a world that changes at an incredible pace and the current and future technologies don’t allow us to carry the burden of abstraction with us forever. We need the next generation of developers to face new challenges and we can’t do that when we tell them that everything works if you use technology X, script Y or process Z. Software breaks, environments are never as good as your local machine and the experiences of our end users are always different to what we have.

A constant stream of perfect solutions?

Right now we are obsessed with building helper tools. Libraries, preprocessors, build scripts, automated packaging scripts. All these things exist and there is a new one each week. This is how software works, this is what we learn in university and this is how big systems are built. And everything we do on the web is now there to scale. If your web site doesn’t use the same things Google, Facebook, Twitter and others use to scale, you will fail when the millions of users come. Will they come for all of us? Not likely, and if they do it will also mean changing your infrastructure.

What we do with this is add more and more complexity. Of course these tools help us and allow us to build things very quickly that easily scale to infinity and beyond. But how easy is it for people to learn these skills? How reliable are these tools not to bite us in a month’s time? How much do they really save us?

And here comes the kicker: how much damage do they do to the evolution of browsers and the competitiveness of the web as a platform?

Want to build to last? Use standards!

The code I wrote 13 years ago is terrible by today’s idea of semantic and valuable HTML. And yet, it works. Even more interesting – although eToys has been bankrupt and closed in the UK for 12 years, archive.org still has a large chunk of it for me to find to show you now as it is standards based web content with HTML and working URLs.

In five years from now, how much of what we do right now will still be archived or working? Right now I get flashbacks to DHTML days, where we forked code for IE and Netscape and told users which browser to use because it was the future.

There seems to be a general belief in the developer community that WebKit is the best engine and, seeing how many browsers use it, that others are not needed any longer. This, to a degree, is the doing of abstraction, as no browser engine is perfect:

jQuery Core has more lines of fixes and patches for WebKit than any other browser. In general these are not recent regressions, but long-standing problems that have yet to be addressed.

It’s starting to feel like oldIE all over again, but with a different set of excuses for why nothing can be fixed.

Dave Methvin, jQuery core team; President of the jQuery Foundation

We repeat the mistakes of DHTML days. Many web sites expect a WebKit browser or expect JavaScript to execute flawlessly. I don’t really have a problem with JavaScript dependency for some functionality (as the “JavaScript turned off” use case is very much a niche phenomenon) but we rely far too much on it for incredibly simple tasks.

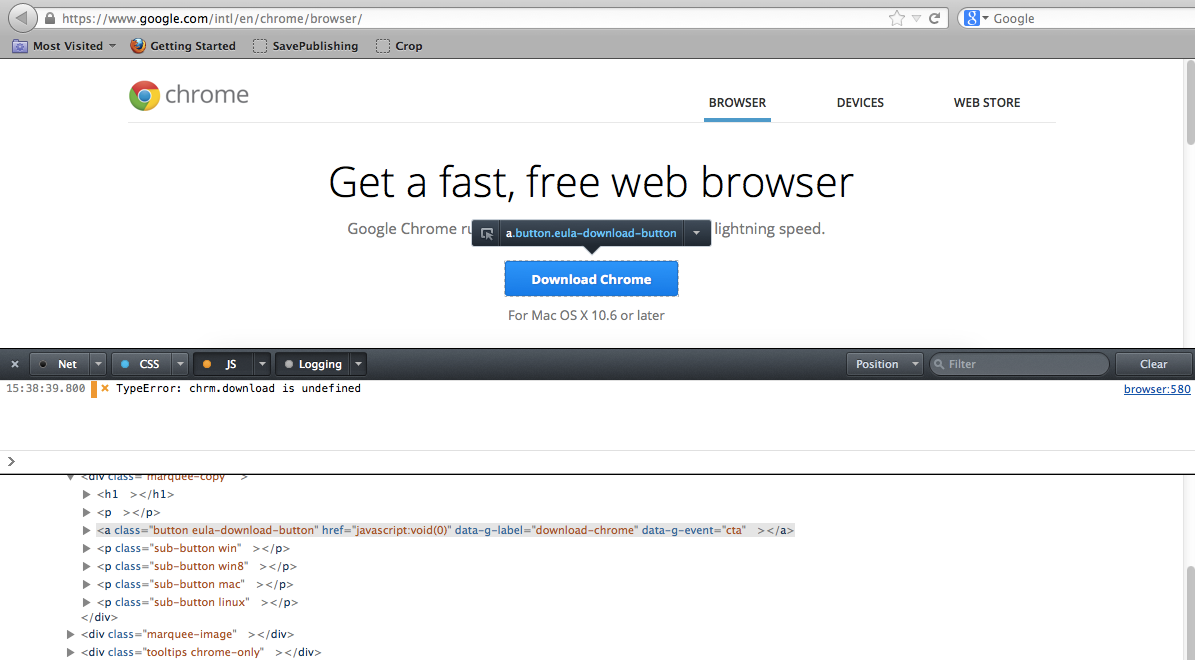

Google experienced that the other day: because of a JavaScript error the download page of Chrome was down for a whole day. Clicking the button to show a terms and conditions page only resulted in a JavaScript error and that was that. No downloads for Chrome that day.
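How that page was actually built is not known here, so the following is only a sketch of the general defence: keep a real link in the HTML and cancel its default behaviour only once the scripted path has worked. The names enhanceLink and showInPage are hypothetical.

```javascript
// Hypothetical sketch: `link` is an <a> element whose href points at a
// real page, `showInPage` is any function that displays that page inline.
function enhanceLink(link, showInPage) {
  link.addEventListener("click", function (event) {
    try {
      showInPage(link.href);
      // Cancel normal navigation only after the scripted path succeeded.
      event.preventDefault();
    } catch (error) {
      // The script failed: leave the event alone so the browser
      // follows the href like any ordinary link.
    }
  }, false);
}
```

If the script never loads at all, the handler is never attached and the link still works, which is the point of starting from plain HTML.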

As Jake Archibald once put it:

“All your users have JavaScript turned off until the first script is loaded and runs”

This applies to all dependencies we have, be they libraries, build scripts, server configuration or syntactic sugar that makes a programming task easier and saves keystrokes. We tell ourselves that we make it easier for people to build things but we are adding to a landfill of dependencies that are not going to go away any time soon.

Dev: “It works on my machine, just not on the server.” Me: “Ok, backup your mail. We’re putting your laptop into production.”

Oisin Grehan on Twitter

There is no perfect plugin, there is no flawless code. In a lot of cases we end up having to debug the library or the plugin itself. That is good if we file pull requests that get implemented, but looking at libraries and the open issues of plugins, this is often not the case. Debugging a plugin is much harder than debugging a script that directly interacts with the browser, as developer tools need to navigate around the abstractions.

Web obesity

The other unintended effect is that we add to web obesity. By reducing functionality to a simple line of code and two JavaScript includes we didn’t only make it easier for people to achieve a certain effect – we also fostered a way of working to add more and more stuff to create a final solution without thinking or knowing about the parts we use. It is not uncommon to see web sites that are huge and use various libraries as they liked one widget built on one and another built on another.

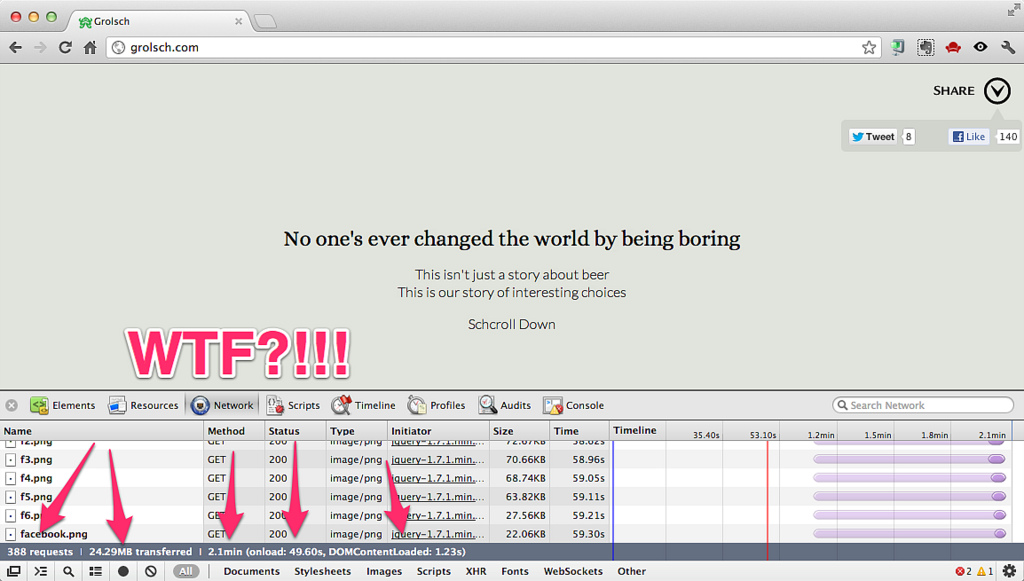

This leads to ridiculously large pages like Grolsch.com which clocks in at 388 HTTP requests with 24.29MB traffic:

What we have there is a 1:1 conversion of a Flash site to an HTML5/CSS3 site without any understanding that the benefit of Flash for brochureware sites like these, full of video and large imagery, was that it can stream all this. Instead of benefiting from offline storage, sites like this one just add plugin after plugin to achieve effects. The visual outcome is more important than the structure or maintainability or – even worse – the final experience for the end user. You can predict that any of these sites start with the best of intentions but when deadlines loom and the budget runs out the cutting happens in QA and by defining a “baseline of supported browsers and platforms”.

Time for a web diet

In other words: this will break soon, and all the work was for a short period of time. Because our market doesn’t stagnate, it moves on. What is now a baseline will be an anachronism in a year’s time. We are going into a market of thin clients on mobile devices, set-top boxes and TV sets.

In essence, we are in quite a pickle: we promised a whole new generation of developers that everything works for the past and the now and suddenly the future is upon us. The “very small helper library” on desktop is slow on mobiles and loading it over a 3G connection is not fun. To me, this means we need to change our pace and re-focus on what is important.

Our libraries, tools and scripts should help developers to build things faster and not worry about differences in browsers. This means we should be one step ahead of the market with our tools and not patch for the past.

What does that mean? A few things

- Stop building for the past – using a library should not be an excuse for a company to use outdated browsers. This hurts the web and is a security issue above everything else. No, IE6 doesn’t get any smooth animations – you cannot promise that unless you spend most of your time testing in it.

- Let browsers do what they do best – animations and transitions are now available in CSS, hardware-accelerated and rendering-optimised. Every time you use animate() you simulate that – badly.

- Componentise libraries – catch-all libraries allowing people to do anything lead to a thinking that you need to use everything. It also means that libraries themselves get bloated and hard to debug

- Build solid bases with optional add-ons – we have more than enough image carousel libraries out there. Most of them don’t get used as they do too many things at once. Instead of covering each and every possible visual outcome, build plugins that do one thing well and can be extended, then collaborate

- Fix and apply fixes – both the pull request and issue queues of libraries and plugins are full of unloved feedback and demands. These need to go away. Of course it is boring to fix issues, but it beats adding another erroneous solution to the already existing pile

- Know the impact, don’t focus on the outcome – far too many of our solutions show how to achieve a certain visual effect and damn the consequences. It is time we stop chasing the shiny and give people tools that don’t hurt overall performance
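To illustrate the “let browsers do what they do best” point from the list above, here is a minimal sketch of handing a fade to the browser instead of stepping through values in script. The helper name fadeTo is hypothetical, and the sketch assumes a browser that supports unprefixed CSS transitions.

```javascript
// Hypothetical helper: hand a fade to the browser's CSS transition engine
// instead of stepping through opacity values in script.
// Assumes a browser that supports unprefixed CSS transitions.
function fadeTo(element, opacity, seconds) {
  // Describe the animation once; the browser handles timing and painting.
  element.style.transition = "opacity " + seconds + "s ease";
  // Changing the property starts the transition.
  element.style.opacity = String(opacity);
}
```

The script only states the end result and the duration; every intermediate frame is left to the rendering engine.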

Here’s the good news: by writing tools over the last few years that allowed people to quickly build things, we got quite a following – especially in the jQuery world. This means that we can fix things at the source and go with two parallel approaches: improving what is currently used and adding new functionality that works fine on mobile devices in our next generation of plugins and solutions. Performance is the main key – what we build should not slow down computers or needlessly use up battery and memory.

There are a few simple things to do to achieve that:

- Use CSS when you can – for transitions and animations. Generate them dynamically if needed. Zepto.js does a good job at that and cssanimations.js shows how it is done without the rest of the library

- requestAnimationFrame beats setInterval/setTimeout – if we use it, animations happen when the browser is ready to show them, not when we force it to apply them without any outcome other than keeping the processor busy

- Transforms and translate beat absolute positioning – they are hardware accelerated and you can easily add other effects like rotation and scaling

- Fingers off the DOM – by far the biggest drain in performance is DOM access. This is unfortunate as jQuery was more or less made to make access to the DOM easier. Make sure to cache your DOM access results and batch DOM changes that cause reflows. Re-use DOM elements instead of creating new ones and adding to a large DOM tree

- Think touch before click – touch events on mobile devices fire much sooner than click events, as a click is delayed to allow for double-tap to zoom. Check for touch support and add touch handlers if it is available.

- Web components are coming – with which we can get rid of lots of bespoke widgets we built in jQuery and other libraries. Web components have the benefit of being native browser controls with much better performance. Think of all the things you can see in a video player shown for a video element. Now think that you could control all of those
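Three of the points above (requestAnimationFrame, transforms instead of positioning, and batching DOM changes) can be sketched together. All function names here are hypothetical, the window and document objects are passed in as parameters only to keep the sketch self-contained, and unprefixed transform support is assumed.

```javascript
// Pick the browser's frame scheduler, falling back to a timer of roughly
// 60 frames per second. `win` is the global window object.
function getFrameScheduler(win) {
  if (typeof win.requestAnimationFrame === "function") {
    return function (callback) {
      return win.requestAnimationFrame(callback);
    };
  }
  return function (callback) {
    return win.setTimeout(function () {
      callback(Date.now());
    }, 16);
  };
}

// Slide an element sideways with a transform instead of changing `left`.
// `schedule` decides when each step runs, so the browser sets the pace.
function slide(element, distance, duration, schedule) {
  var start = null;
  function step(now) {
    if (start === null) {
      start = now;
    }
    var progress = Math.min((now - start) / duration, 1);
    element.style.transform = "translateX(" + (distance * progress) + "px)";
    if (progress < 1) {
      schedule(step);
    }
  }
  schedule(step);
}

// Build all new items off-document, then touch the live DOM only once.
function appendItems(doc, list, labels) {
  var fragment = doc.createDocumentFragment();
  for (var i = 0; i < labels.length; i++) {
    var item = doc.createElement("li");
    item.textContent = labels[i];
    fragment.appendChild(item);
  }
  list.appendChild(fragment);
}
```

In a page this might be used as slide(box, 200, 300, getFrameScheduler(window)), with box being an element looked up once and kept in a variable rather than queried again on every frame.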

Right now the mobile web looks to me a lot like Temple Run – there is a lot of gold lying around to be taken but there are lots of stumbling blocks, barriers and holes in the road. Our tools and solutions should patch these holes and make the barriers easy to jump over, not become stumbling blocks in and of themselves.